Welcome back, technology enthusiasts! In this article, we’ll delve into the fascinating world of distributed word representations and explore various reweighting schemes. These schemes aim to amplify important associations while de-emphasizing mundane or erroneous ones, ultimately enhancing semantic information extraction.

Importance of Reweighting

Distributed word representations offer a powerful way to capture semantic relationships between words. However, relying solely on raw frequency counts can yield suboptimal results. Frequency alone often fails to capture the nuanced semantic information we seek.

To address this limitation, reweighting schemes have been developed with the goal of refining the underlying counts. These schemes aim to amplify meaningful associations while reducing the impact of noise and errors in the data.

Normalization: A Basic Scheme

One of the simplest reweighting schemes is normalization. This approach looks only at the row for a given word. We compute the L2 (Euclidean) length of the row vector and divide every value in the row by that length, so each row becomes a vector of length one. This removes the effect of overall frequency, so words are compared by how their counts are spread across contexts rather than by how often they occur.
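As a minimal sketch of row-wise L2 normalization, the snippet below uses plain Python lists. The function name `l2_normalize_rows` and the toy counts are assumptions made for illustration; a real pipeline would more likely use an array library.

```python
import math

def l2_normalize_rows(matrix):
    """Divide each row by its L2 (Euclidean) length; all-zero rows are left unchanged."""
    normalized = []
    for row in matrix:
        length = math.sqrt(sum(v * v for v in row))
        normalized.append([v / length for v in row] if length > 0 else list(row))
    return normalized

# Hypothetical co-occurrence counts: rows are target words, columns are context words.
counts = [
    [3.0, 4.0, 0.0],
    [10.0, 0.0, 0.0],
]
normed = l2_normalize_rows(counts)
```

After normalization every non-zero row has length one, so the second row, despite its much larger raw count, ends up on the same scale as the first.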

Observed over Expected: A Holistic Approach

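To take a more comprehensive approach to reweighting, we need to consider both row and column contexts. One such scheme is called “Observed over Expected”. It calculates the expected value for each cell under the assumption that the row and column are independent: the row sum times the column sum, divided by the total of all counts. Dividing the observed count by this expected count measures the degree of association between words. A ratio above 1 means the pair co-occurs more often than chance would predict, and a ratio below 1 means it co-occurs less often.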
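The calculation can be sketched in a few lines of plain Python. The function name `observed_over_expected` and the toy matrix are illustrative assumptions, and returning 0.0 for cells whose expected count is zero is a choice made here, not something the scheme prescribes.

```python
def observed_over_expected(counts):
    """Return observed / expected per cell, where expected = row_sum * col_sum / total."""
    row_sums = [sum(row) for row in counts]
    col_sums = [sum(col) for col in zip(*counts)]
    total = sum(row_sums)
    if total == 0:
        # Assumption: an all-zero matrix carries no associations, so return zeros.
        return [[0.0 for _ in row] for row in counts]
    result = []
    for i, row in enumerate(counts):
        new_row = []
        for j, observed in enumerate(row):
            expected = row_sums[i] * col_sums[j] / total
            # Assumption: a cell with zero expected count is reported as 0.0.
            new_row.append(observed / expected if expected > 0 else 0.0)
        result.append(new_row)
    return result

# Hypothetical counts: each word strongly prefers one of the two contexts.
counts = [
    [8.0, 2.0],
    [2.0, 8.0],
]
oe = observed_over_expected(counts)
```

In this toy matrix every expected count is 5, so the diagonal cells come out above 1 (more frequent than chance) and the off-diagonal cells below 1.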

Pointwise Mutual Information (PMI)

PMI is a popular reweighting scheme that considers both row and column contexts. It divides the observed count by the expected count and takes the logarithm of the result. Because of the logarithm, counts larger than expected receive positive values, counts smaller than expected receive negative values, and very large ratios are compressed. PMI can be a powerful method for extracting meaningful semantic information from word representations.
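A minimal sketch of PMI in plain Python follows. The function name `pmi` and the toy counts are assumptions for illustration, as is the choice to return negative infinity for zero counts, which makes the undefined cells visible instead of hiding them.

```python
import math

def pmi(counts):
    """Return log(observed / expected) per cell; zero counts yield -inf."""
    row_sums = [sum(row) for row in counts]
    col_sums = [sum(col) for col in zip(*counts)]
    total = sum(row_sums)
    result = []
    for i, row in enumerate(counts):
        new_row = []
        for j, observed in enumerate(row):
            if observed > 0:
                expected = row_sums[i] * col_sums[j] / total
                new_row.append(math.log(observed / expected))
            else:
                # log(0) is undefined; -inf is used here as an explicit marker.
                new_row.append(float("-inf"))
        result.append(new_row)
    return result

# Hypothetical counts containing one zero cell.
counts = [
    [8.0, 2.0],
    [0.0, 10.0],
]
pmi_values = pmi(counts)
```

Here the top-left cell is observed twice as often as expected, so its PMI is log 2, while the top-right cell is observed less often than expected and gets a negative value.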

Positive PMI: A Refined Approach

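Positive PMI is a variant of PMI that addresses the fact that PMI is undefined when a count is zero, because the logarithm of zero is undefined. A common workaround is to set those cells to zero, but this makes the scale incoherent: larger-than-expected counts get positive values, smaller-than-expected counts get negative values, and yet zero counts, the smallest of all, land in between at zero. Positive PMI resolves this by mapping all negative values to zero as well. Positive values still reflect the amplification of larger-than-expected counts, while everything at or below the expected count, including zero counts, is set to zero. This gives a more coherent and meaningful view of the reweighted values.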
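Positive PMI can be sketched as a small change to the PMI calculation. As before, this is plain Python with a hypothetical function name (`ppmi`) and made-up counts, intended as an illustration and not a reference implementation.

```python
import math

def ppmi(counts):
    """Return max(0, log(observed / expected)) per cell; zero counts map to 0."""
    row_sums = [sum(row) for row in counts]
    col_sums = [sum(col) for col in zip(*counts)]
    total = sum(row_sums)
    result = []
    for i, row in enumerate(counts):
        new_row = []
        for j, observed in enumerate(row):
            if observed > 0:
                expected = row_sums[i] * col_sums[j] / total
                # Clip negative PMI values (smaller than expected) to zero.
                new_row.append(max(0.0, math.log(observed / expected)))
            else:
                # Zero counts join the other below-expected cells at zero.
                new_row.append(0.0)
        result.append(new_row)
    return result

# Hypothetical counts containing one zero cell.
counts = [
    [8.0, 2.0],
    [0.0, 10.0],
]
ppmi_values = ppmi(counts)
```

Both the zero-count cell and the smaller-than-expected cell end up at zero, and only the two larger-than-expected cells keep positive values.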

Evaluating Reweighting Schemes

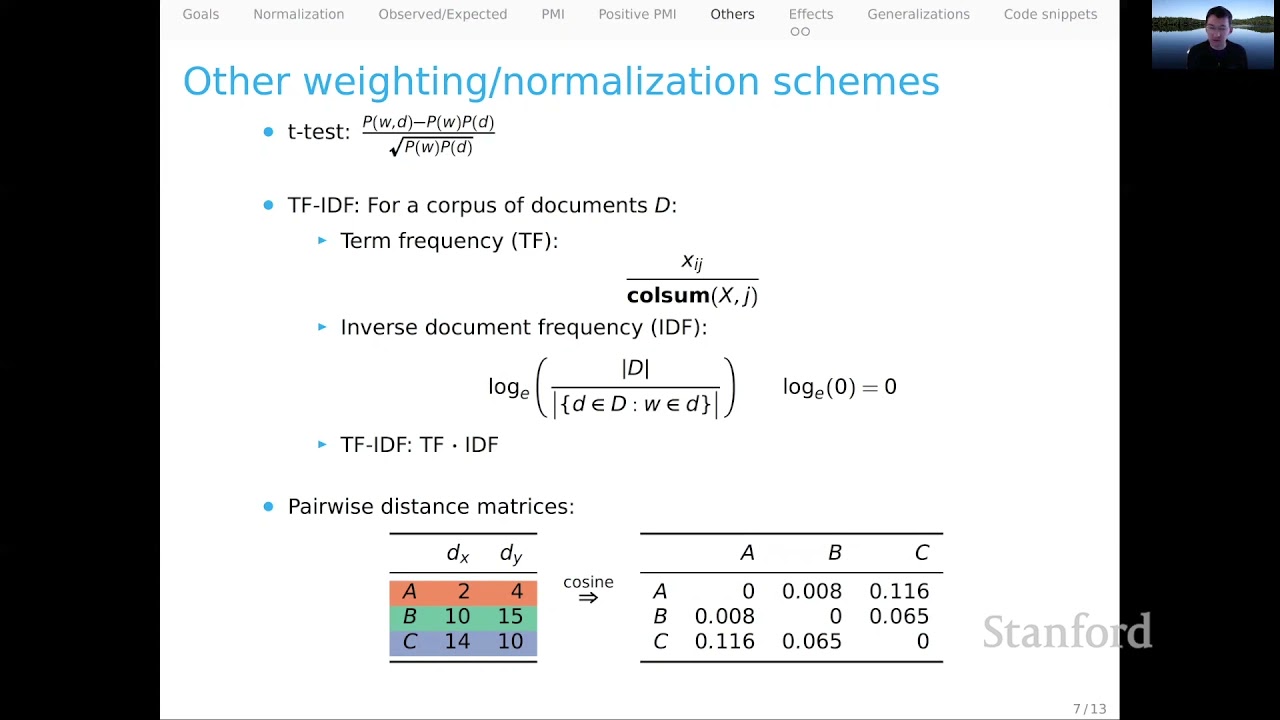

When evaluating reweighting schemes, it helps to look at two things: how the reweighted values relate to the raw counts, and how those values are distributed. Many schemes, such as L2 normalization and converting rows to probability distributions, constrain values to a narrow range but still show a heavy skew towards small values. Observed over expected and TF-IDF have larger ranges, but their distributions may not be suitable for downstream applications. PMI and positive PMI strike a balance between range and skew, offering meaningful values and distributions.

Final Thoughts

Reweighting word representations is a vital step in uncovering the rich semantic information contained within them. By applying various reweighting schemes, such as normalization, observed over expected, PMI, and positive PMI, we can enhance the associations between words and refine their semantic representations.

Remember to consider the specific use case and desired outcomes when choosing a reweighting scheme. Each scheme has its strengths and limitations, so it’s important to experiment and find the best approach for your particular application.

FAQs

Q: Why is reweighting important for word representations?

A: Reweighting helps to amplify important associations and reduce the impact of noise or errors in the data, enhancing the semantic information extracted from word representations.

Q: What is the difference between PMI and positive PMI?

A: PMI calculates the log of the observed count over the expected count, while positive PMI maps negative values to zero, ensuring a more coherent distribution of values.

Q: Which reweighting scheme should I choose for my application?

A: The choice of reweighting scheme depends on the specific use case and desired outcomes. It is essential to experiment and find the scheme that best aligns with your goals.

Conclusion

In this article, we explored various reweighting schemes for enhancing word representations. We discussed techniques such as normalization, observed over expected, PMI, and positive PMI. Each scheme offers a unique perspective on capturing semantic associations and refining the underlying counts.

Remember, by carefully selecting the appropriate reweighting scheme, you can unlock the potential of word representations to extract meaningful and accurate semantic information. Keep experimenting and exploring new approaches to stay at the forefront of technology advancements.

Stay curious and keep learning!