Adversarial testing is a promising approach in Natural Language Processing (NLP) that can reveal valuable insights about the systems we develop. Traditional evaluation in NLP builds a dataset through a single, homogeneous process and measures system performance on a held-out test portion of that data. Adversarial testing takes a different approach: it builds challenging test sets that are distinct from the data used for system development, which gives us a better sense of how our systems are likely to perform in the real world.

Understanding Adversarial Testing

In adversarial testing, a dataset is first created by whatever means, and the system is developed and assessed using that dataset. The crucial next step is to build a new test set of examples that are expected to be challenging for the system, given how it was developed and the data it was trained on. Only after all system development is complete is the system evaluated on this new test set, usually by accuracy or a similar metric. The result is reported as an estimate of the system's capacity to generalize beyond its original data.
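The protocol can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the `accuracy` and `adversarial_report` helpers, the keyword-based `keyword_model`, and the tiny sentiment examples are all hypothetical stand-ins for a real system and real datasets.

```python
from typing import Callable, Dict, Sequence, Tuple

Example = Tuple[str, str]  # (input text, gold label)


def accuracy(model: Callable[[str], str], data: Sequence[Example]) -> float:
    """Fraction of examples whose predicted label matches the gold label."""
    if not data:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(model(text) == label for text, label in data)
    return correct / len(data)


def adversarial_report(
    model: Callable[[str], str],
    original_test: Sequence[Example],
    adversarial_test: Sequence[Example],
) -> Dict[str, float]:
    """Evaluate a *finished* system on both test sets.

    Call this only after all development (training, tuning, model selection)
    is complete; the adversarial set must never be used during development.
    """
    return {
        "original_test_acc": accuracy(model, original_test),
        "adversarial_test_acc": accuracy(model, adversarial_test),
    }


# Hypothetical toy system "developed" on the original data: a keyword rule.
def keyword_model(text: str) -> str:
    return "pos" if "good" in text.lower() else "neg"


original_test = [("A good film.", "pos"), ("Dull and slow.", "neg")]
# Hypothetical adversarial examples: negation, which the keyword rule never handles.
adversarial_test = [("Not a good film.", "neg"), ("Hardly dull at all.", "pos")]

print(adversarial_report(keyword_model, original_test, adversarial_test))
# → {'original_test_acc': 1.0, 'adversarial_test_acc': 0.0}
```

The gap between the two numbers is what matters: the original test set suggests the keyword rule is perfect, while the adversarial set shows it never learned to handle negation.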

Adversarial testing can help bridge the gap between the homogeneous process used to create the original dataset and the complexity of the real world. It allows us to assess how well our systems perform on examples that they are likely to encounter in real-world deployments.

The Evolution of Adversarial Testing

Adversarial testing has roots in the Turing test, in which a computer tries to convince a human interrogator that it is human. It also draws on the practice of constructing examples that stress-test systems by probing their understanding of the world and of the complexity of language.

Building on these ideas, researchers have continued to develop and systematize adversarial testing. They have designed challenge problems that assess system understanding of specific phenomena, as well as tests that evaluate how systems handle new styles or genres. These adversarial tests aim to push the boundaries and reveal the limitations of the systems we develop.

The Power of Adversarial Testing

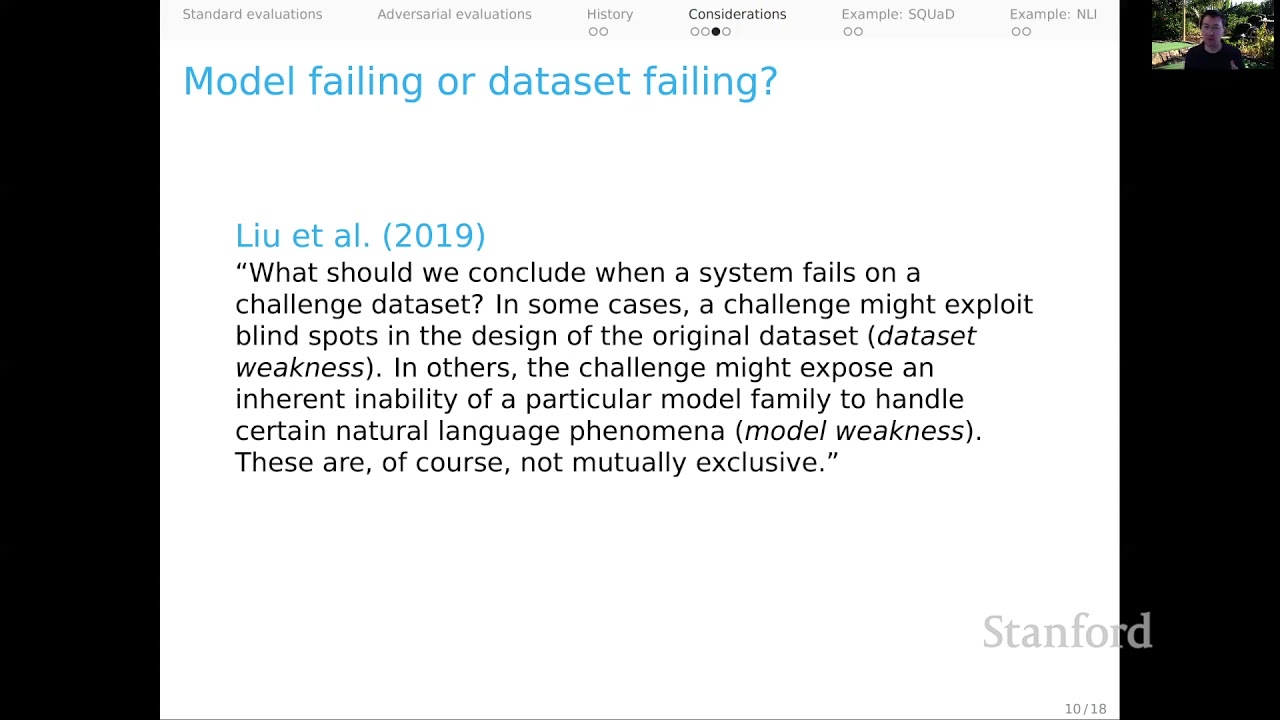

Adversarial testing can provide valuable insights into the strengths and weaknesses of our systems. By incorporating adversarial evaluations, we can distinguish between dataset weaknesses and model weaknesses. Dataset weaknesses can be addressed by supplementing the training data with examples that cover the relevant scenarios. Model weaknesses, on the other hand, point to inherent limitations in the modeling approach itself, which more data of the same kind will not fix.

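To determine whether a failure on an adversarial test stems from a dataset weakness or a model weakness, researchers have proposed inoculation by fine-tuning. The system is fine-tuned on a small number of challenge examples and then retested on both the original test set and the held-out portion of the challenge set. If performance on the challenge set improves while performance on the original test set holds, the failure pointed to a dataset weakness. If challenge performance stays low, it points to a model weakness. If performance on the original test set drops, the challenge set likely differs from the original data in some systematic way, such as its label distribution or annotation artifacts. These outcomes guide where future improvements should go.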
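The interpretation of inoculation results can be expressed as a small decision rule. The sketch below follows the usual reading of the three possible outcomes (the challenge gap closes, nothing changes, or original performance drops), but the `InoculationScores` fields, the `diagnose` function, and the `tol` threshold with its default of 0.02 are hypothetical choices for illustration, not values prescribed by the method.

```python
from dataclasses import dataclass


@dataclass
class InoculationScores:
    """Accuracies before and after fine-tuning on a few challenge examples."""
    original_before: float
    original_after: float
    challenge_before: float  # measured on the held-out part of the challenge set
    challenge_after: float


def diagnose(s: InoculationScores, tol: float = 0.02) -> str:
    """Map inoculation outcomes to a coarse diagnosis.

    `tol` is a hypothetical tolerance for treating a change as negligible.
    """
    challenge_gain = s.challenge_after - s.challenge_before
    original_drop = s.original_before - s.original_after

    if original_drop > tol:
        # Fitting the challenge data hurt the original task: the challenge set
        # may differ in distribution or carry artifacts of its own.
        return "challenge set differs from original distribution"
    if challenge_gain > tol:
        # A few examples were enough to close the gap: missing coverage.
        return "dataset weakness"
    # Challenge performance did not move: more data of this kind does not help.
    return "model weakness"


print(diagnose(InoculationScores(0.88, 0.87, 0.40, 0.85)))  # → dataset weakness
print(diagnose(InoculationScores(0.88, 0.88, 0.40, 0.41)))  # → model weakness
print(diagnose(InoculationScores(0.88, 0.70, 0.40, 0.80)))
# → challenge set differs from original distribution
```

The original-set check comes first on purpose: a large challenge-set gain is not good news if it was bought by forgetting the original task.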

Application of Adversarial Testing

Adversarial testing has been applied to various domains, including question-answering systems and natural language inference (NLI) models.

In question answering, adversarial testing has shown that systems are vulnerable to misleading sentences appended to passages. Systems were readily misled by these added sentences, even though humans could ignore them without difficulty. This demonstrates the need for systems that can separate relevant information from potentially misleading cues.
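The failure mode is easy to reproduce with a deliberately naive system. Everything in this sketch is hypothetical: `overlap_answerer` is a toy bag-of-words sentence selector, not one of the systems that was actually tested, and the passage, question, and distractor are invented. The selector returns the passage sentence that shares the most words with the question, so an appended sentence that echoes the question's wording wins even though it is about a different dam.

```python
import re
from typing import List, Set


def tokens(text: str) -> Set[str]:
    """Lowercased word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_answerer(passage: List[str], question: str) -> str:
    """Toy system: return the sentence with the largest word overlap with the question."""
    q = tokens(question)
    return max(passage, key=lambda sent: len(tokens(sent) & q))


passage = [
    "The Riverside Dam was completed in 1952.",
    "It supplies water to three nearby towns.",
]
question = "In what year was the Riverside Dam completed?"

# Hypothetical distractor: mirrors the question's wording but names a different dam.
distractor = "The Lakeside Dam was completed in what locals call the record year of 1961."

print(overlap_answerer(passage, question))
# → The Riverside Dam was completed in 1952.
print(overlap_answerer(passage + [distractor], question))
# → The Lakeside Dam was completed in what locals call the record year of 1961.
```

A human reader discards the distractor because it names the wrong entity; the toy system only counts shared words, so the distractor's seven overlapping words beat the correct sentence's six.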

In NLI, adversarial testing has highlighted the limitations of systems that perform well on standard datasets but struggle with adversarial examples. Modifying hypotheses, for example by substituting a single word, has shown that systems often do not behave systematically in the way human intuitions would predict. More recent models such as RoBERTa, however, have shown promising results on these tests, suggesting a better grasp of lexical relationships.
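Challenge examples of this kind can be generated mechanically from a premise by swapping one word. The sketch below assumes a tiny hand-written lexicon (`LEXICON`, hypothetical) in which each substitute carries the label a human would assign: a synonym or hypernym preserves entailment, while an antonym or a co-hyponym (a different member of the same category) yields a contradiction. `single_word_variants`, `accuracy`, and the `always_entail` baseline are likewise illustrative names; a real challenge set would draw on a curated lexical resource and human validation.

```python
from typing import Callable, Dict, List, Tuple

Triple = Tuple[str, str, str]  # (premise, hypothesis, gold label)

# Hypothetical mini-lexicon: word -> list of (substitute, lexical relation).
LEXICON: Dict[str, List[Tuple[str, str]]] = {
    "guitar": [("instrument", "hypernym"), ("violin", "co-hyponym")],
    "outside": [("outdoors", "synonym"), ("inside", "antonym")],
}

# Label a human would assign when the substitute replaces the premise word.
RELATION_TO_LABEL = {
    "synonym": "entailment",
    "hypernym": "entailment",
    "antonym": "contradiction",
    "co-hyponym": "contradiction",
}


def single_word_variants(premise: str) -> List[Triple]:
    """Build (premise, hypothesis, gold label) triples by one-word substitution."""
    words = premise.split()
    examples = []
    for i, word in enumerate(words):
        core = word.rstrip(".")  # keep sentence-final punctuation intact
        for substitute, relation in LEXICON.get(core, []):
            new_words = words.copy()
            new_words[i] = word.replace(core, substitute)
            examples.append((premise, " ".join(new_words), RELATION_TO_LABEL[relation]))
    return examples


def accuracy(model: Callable[[str, str], str], data: List[Triple]) -> float:
    """Fraction of triples where the model's label matches the gold label."""
    return sum(model(p, h) == y for p, h, y in data) / len(data)


def always_entail(premise: str, hypothesis: str) -> str:
    """Hypothetical baseline that ignores meaning: near-identical text -> entailment."""
    return "entailment"


challenge = single_word_variants("The man is playing the guitar outside.")
for _, hypothesis, label in challenge:
    print(f"{label:13} {hypothesis}")
print(accuracy(always_entail, challenge))  # → 0.5
```

A model whose behavior matches human intuitions should get both directions right; the `always_entail` baseline scores 0.5 here because it cannot tell a synonym from an antonym, even though every hypothesis differs from the premise by only one word.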

Harnessing the Potential of Adversarial Testing

Adversarial testing has emerged as a powerful tool for evaluating the capabilities of NLP systems. By challenging systems with carefully designed tests, we can uncover their strengths and weaknesses, enabling us to improve their performance and robustness.

As technology enthusiasts and engineers, we must embrace adversarial testing to enhance the reliability of our systems. By conducting thoughtful evaluations and addressing both dataset weaknesses and model weaknesses, we can push the boundaries of system performance and develop more capable and robust NLP solutions.

FAQs

Q: What is adversarial testing in NLP?

A: Adversarial testing means evaluating an NLP system, once development is complete, on a new test set built to be challenging given the original dataset the system was developed on. Because these examples differ from the original data, the evaluation shows how well the system handles new scenarios.

Q: What can adversarial testing tell us?

A: Adversarial testing can provide valuable insights into the strengths and weaknesses of NLP systems. It helps us distinguish between dataset weaknesses and model weaknesses, guiding improvements in system performance and robustness.

Q: How can we address dataset weaknesses identified through adversarial testing?

A: Dataset weaknesses can be addressed by supplementing the training data with examples that cover the relevant scenarios highlighted in the adversarial test. This gives the system broader coverage of the phenomena in question and should improve its performance on challenging examples.

Q: What are the benefits of conducting adversarial testing in NLP?

A: Adversarial testing gives us an estimate of how NLP systems will perform in the real world by challenging them with scenarios beyond the original training data. This helps bridge the gap between system capabilities and the complexity of the real world, enabling us to build more robust and reliable NLP solutions.

Conclusion

Adversarial testing is a powerful tool for evaluating NLP systems and uncovering their strengths and weaknesses. By testing systems on new, difficult examples, we can find what limits their robustness and performance and work to improve both. Adversarial testing allows us to push the boundaries of our systems' capabilities and develop NLP solutions that can thrive in real-world scenarios.

To learn more about technology and its advancements, visit Techal.org.