Welcome to Techal, your go-to source for all things technology. In today’s article, we will dive into the architecture of recurrent neural networks (RNNs) and explore the concept of forward propagation over time. RNNs play a crucial role in various applications, particularly in natural language processing (NLP) tasks like sentiment analysis. By the end of this tutorial, you’ll have a clear understanding of how RNNs work and how they maintain sequence information.

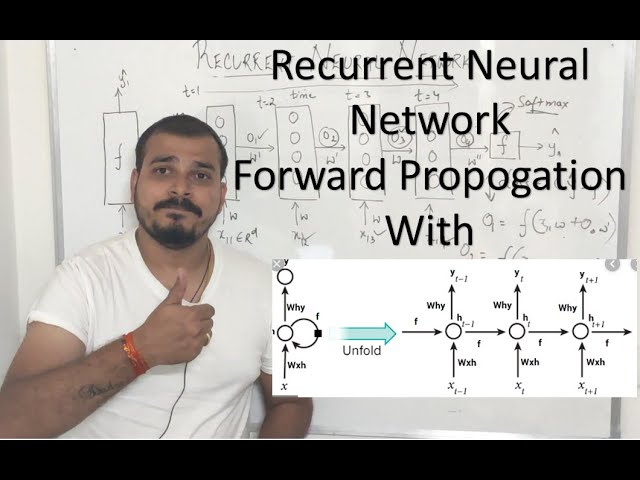

Recurrent Neural Network Architecture

An RNN consists of three main components: an input layer, a hidden layer, and an output layer. The input layer can have any number of dimensions, one per feature of the input vector. The hidden layer contains multiple neurons whose outputs at one time step are fed back in at the next, and it is this recurrent connection that preserves sequence information. The output layer produces the prediction, either at every time step or only after the final one, depending on the task.
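As a rough sketch of how these three layers translate into parameters, the snippet below allocates the weight matrices a single-hidden-layer RNN would need. The helper name `init_rnn_params`, the matrix names (`W_xh`, `W_hh`, `W_hy`), and the layer sizes are illustrative assumptions, not something fixed by this article.

```python
import numpy as np

def init_rnn_params(input_dim, hidden_dim, output_dim, seed=0):
    """Hypothetical helper: allocate the parameters of a single-hidden-layer RNN."""
    rng = np.random.default_rng(seed)
    return {
        # input layer -> hidden layer
        "W_xh": rng.normal(0.0, 0.1, size=(hidden_dim, input_dim)),
        # hidden layer -> hidden layer (the recurrent connection)
        "W_hh": rng.normal(0.0, 0.1, size=(hidden_dim, hidden_dim)),
        # hidden layer -> output layer
        "W_hy": rng.normal(0.0, 0.1, size=(output_dim, hidden_dim)),
        "b_h": np.zeros(hidden_dim),
        "b_y": np.zeros(output_dim),
    }

# Assumed sizes: 4 input features, 3 hidden neurons, 2 output classes.
params = init_rnn_params(input_dim=4, hidden_dim=3, output_dim=2)
for name, value in params.items():
    print(name, value.shape)
```

Note that none of the shapes depend on the sentence length: the same matrices are reused at every time step.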

Forward Propagation Over Time

Forward propagation refers to the process of passing input data through the neural network to generate the desired output. In the context of RNNs, forward propagation occurs over time, taking into account the sequential nature of the data.

Let’s break down the steps involved in forward propagation over time:

Step 1: Preprocessing

Each input word in a sentence is represented as a vector, typically using a learned embedding such as word2vec or a simple one-hot encoding. These word vectors are then passed to the input layer one at a time, one word per time step.
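The preprocessing step might look like the sketch below. It uses one-hot vectors over a tiny vocabulary as a stand-in for a learned embedding; that choice, the lowercased example sentence, and the helper name `one_hot` are assumptions made for illustration.

```python
import numpy as np

# Example sentence, lowercased and split into words (one word per time step).
sentence = ["this", "food", "is", "bad"]

# Map each distinct word to an integer index.
vocab = {word: idx for idx, word in enumerate(sorted(set(sentence)))}

def one_hot(word, vocab):
    """Return a vector with a 1.0 at the word's index and 0.0 elsewhere."""
    vec = np.zeros(len(vocab))
    vec[vocab[word]] = 1.0
    return vec

# Stack the word vectors into a (time_steps, input_dim) array.
inputs = np.stack([one_hot(word, vocab) for word in sentence])
print(inputs.shape)
```

Each row of `inputs` is what the network receives at one time step. A real model would usually replace the one-hot rows with dense embedding vectors.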

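Step 2: Hidden Layer Computation

In the hidden layer, the input vector for the current time step is multiplied by the input weights, and the hidden output from the previous time step is multiplied by a separate set of recurrent weights. The two results are summed, a bias is added, and the total is passed through an activation function such as tanh, ReLU, or sigmoid. This step is performed for each time step.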
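A single hidden-layer update can be sketched as follows. The function name `hidden_step`, the choice of tanh, and the small hand-picked weights are assumptions for illustration only.

```python
import numpy as np

def hidden_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One hidden-layer update: weighted input plus weighted previous state, then tanh."""
    return np.tanh(W_xh @ x_t + W_hh @ h_prev + b_h)

# Hand-picked toy values: 2 input features, 2 hidden neurons.
x_t = np.array([1.0, 0.0])                   # input at the current time step
h_prev = np.zeros(2)                         # no history yet at the first step
W_xh = np.array([[0.5, -0.5], [1.0, 0.0]])   # input weights
W_hh = 0.1 * np.eye(2)                       # recurrent weights
b_h = np.zeros(2)

h_t = hidden_step(x_t, h_prev, W_xh, W_hh, b_h)
print(h_t)
```

Because `h_prev` is all zeros here, the result depends only on the input; from the second time step onward the recurrent term `W_hh @ h_prev` also contributes.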

Step 3: Output Computation

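The outputs from the hidden layer at one time step are fed back into the same set of neurons at the next time step, together with the next input. The same weights are reused at every time step, so sequence information is carried forward without adding new parameters for each word. This process continues until the last time step.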
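The feedback loop described above can be sketched as a plain Python loop over time steps. The function name `rnn_forward`, the random inputs and weights, and the zero initial state are assumptions for illustration.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run the hidden layer over every time step, reusing the same weights each time."""
    h = np.zeros(W_hh.shape[0])   # assumed initial hidden state: all zeros
    states = []
    for x_t in inputs:            # one iteration per time step
        # The previous hidden output h is fed back in alongside the new input x_t.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)       # shape: (time_steps, hidden_dim)

rng = np.random.default_rng(0)
inputs = rng.normal(size=(4, 5))            # 4 time steps, 5 input features
W_xh = rng.normal(scale=0.5, size=(3, 5))   # 3 hidden neurons
W_hh = rng.normal(scale=0.5, size=(3, 3))
b_h = np.zeros(3)

states = rnn_forward(inputs, W_xh, W_hh, b_h)
print(states.shape)
```

The last row of `states` is the hidden output after the final time step, which summarizes the whole sequence.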

Step 4: Final Output and Activation

After all the time steps are processed, the final hidden output is passed through the output layer and then a softmax activation function, which is the usual choice for classification tasks like sentiment analysis. The softmax function assigns a probability to each class, and the class with the highest probability is taken as the prediction.
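As a minimal sketch of this last step, the snippet below turns a final hidden output into class probabilities. The hidden values, the output weights `W_hy`, and the two class labels are made-up assumptions; only the softmax formula itself is standard.

```python
import numpy as np

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)   # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

# Assumed values: a 3-neuron final hidden output and a 2-class output layer.
h_final = np.array([0.2, -0.4, 0.7])
W_hy = np.array([[1.0, 0.0, -1.0],
                 [-1.0, 0.5, 1.0]])
b_y = np.zeros(2)
labels = ["positive", "negative"]       # hypothetical class order

probs = softmax(W_hy @ h_final + b_y)
prediction = labels[int(np.argmax(probs))]
print(probs, prediction)
```

For a two-class problem a single sigmoid output would work equally well; softmax generalizes the same idea to any number of classes.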

Understanding Sequence Information Preservation

A crucial aspect of RNNs is their ability to retain sequence information. As we go through each time step, the output from the previous time step is fed back into the hidden layer. This feedback ensures that the model considers information from earlier time steps when processing subsequent inputs.

To illustrate this, let’s consider an example:

Suppose we have a sentence, “This food is bad.” To determine the sentiment of this review, we process each word sequentially. At the first time step, “This” is passed through the network, producing an output. This output is then fed into the hidden layer along with the second word, “food.” The same process is repeated for each subsequent word.

In this way, the sequence information is maintained throughout the forward propagation process. This is a significant advantage over techniques like bag-of-words or tf-idf, where sequence information is discarded.
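The difference can be checked with a small experiment. The sketch below compares an order-insensitive summary (summing the word vectors, in the spirit of bag-of-words) with the final hidden output of a tiny RNN, on the same words in two different orders. The random word vectors, random weights, and helper names are all assumptions for illustration, and the network is untrained.

```python
import numpy as np

rng = np.random.default_rng(42)
words = ["this", "food", "is", "bad"]

# Hypothetical 3-dimensional word vectors and RNN weights (4 hidden neurons).
embed = {word: rng.normal(size=3) for word in words}
W_xh = rng.normal(scale=0.5, size=(4, 3))
W_hh = rng.normal(scale=0.5, size=(4, 4))

def final_state(sentence):
    """Final hidden output of the RNN after reading the words in order."""
    h = np.zeros(4)
    for word in sentence:
        h = np.tanh(W_xh @ embed[word] + W_hh @ h)
    return h

def summed_vectors(sentence):
    """Order-insensitive summary: just add up the word vectors."""
    return np.sum([embed[word] for word in sentence], axis=0)

original = ["this", "food", "is", "bad"]
shuffled = ["bad", "is", "food", "this"]

same_sum = np.allclose(summed_vectors(original), summed_vectors(shuffled))
same_rnn = np.allclose(final_state(original), final_state(shuffled))
print("summed vectors identical:", same_sum)
print("RNN final states identical:", same_rnn)
```

The summed representation cannot tell the two orderings apart, while the RNN's final hidden output changes when the word order changes.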

Conclusion

In this tutorial, we explored the architecture of recurrent neural networks (RNNs) and the concept of forward propagation over time. RNNs are well suited to tasks involving sequential data, such as natural language processing. By maintaining sequence information, RNNs can capture dependencies between earlier and later elements of a sequence, which order-insensitive representations like bag-of-words cannot.

We hope you found this tutorial helpful and gained a deeper understanding of RNNs. Stay tuned for more informative articles on the fascinating world of technology!

FAQs

Q: What is the purpose of forward propagation in recurrent neural networks?

A: Forward propagation passes the input data through the network to produce the output. In an RNN it runs one time step at a time, so the sequential nature of the data is taken into account and sequence information is preserved.

Q: How does forward propagation in recurrent neural networks maintain sequence information?

A: The forward propagation process in recurrent neural networks maintains sequence information by feeding back the output from each time step into the hidden layer. This allows the model to consider information from previous time steps when processing subsequent inputs.

Q: What are the applications of recurrent neural networks?

A: Recurrent neural networks are widely used in natural language processing tasks such as sentiment analysis, language translation, speech recognition, and text generation. They are also applied in time series forecasting and image captioning.
