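Neural Information Retrieval (IR) has significantly improved the quality of search results by using neural networks to estimate how relevant a document is to a query. In this article, we will look at query-document interaction models, BERT-based rankers, and two ways to reduce their latency: precomputing document representations and learning term weights.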

Query-Document Interaction: The Key to Neural IR

One effective paradigm for building neural IR models is query-document interaction. The query and the document are tokenized, and each token is embedded into a static vector representation. These vectors are used to build a query-document interaction matrix, which holds the cosine similarity between every query term and every document term. Neural layers, such as convolutional or linear layers with pooling, then reduce this matrix to a single score that estimates the relevance of the document to the query.

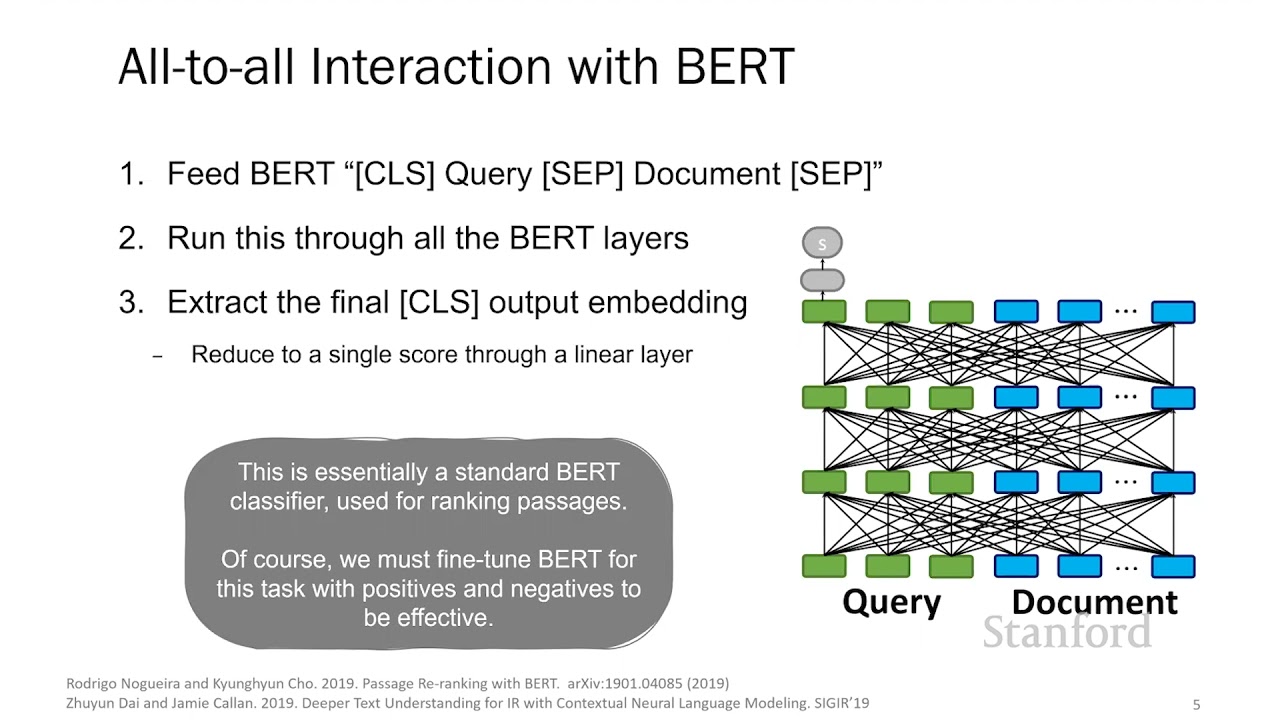
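The interaction matrix described above can be sketched in a few lines of Python. This is a minimal illustration, not a trained model: the two-dimensional embeddings are made up, and the fixed pooling in `relevance_score` (max over document terms, then mean over query terms) is only a stand-in for the learned convolutional or linear layers.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def interaction_matrix(query_vecs, doc_vecs):
    """One row per query term, one column per document term."""
    return [[cosine(q, d) for d in doc_vecs] for q in query_vecs]

def relevance_score(matrix):
    """Assumed stand-in for learned layers: max-pool each row, then average."""
    best_match_per_query_term = [max(row) for row in matrix]
    return sum(best_match_per_query_term) / len(best_match_per_query_term)

# Hypothetical static embeddings, chosen only to keep the arithmetic easy.
EMBEDDINGS = {
    "neural": [1.0, 0.0],
    "search": [0.0, 1.0],
    "ranking": [0.0, 2.0],
    "models": [3.0, 0.0],
}

query = ["neural", "search"]
doc = ["ranking", "models"]
matrix = interaction_matrix(
    [EMBEDDINGS[t] for t in query],
    [EMBEDDINGS[t] for t in doc],
)
score = relevance_score(matrix)
```

Because cosine similarity ignores vector length, "search" and "ranking" match perfectly here even though their vectors differ in magnitude. A real model would learn how to weigh such matches instead of using a fixed pooling rule.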

Harnessing the Power of BERT for Ranking

The introduction of BERT (Bidirectional Encoder Representations from Transformers) in 2018 brought about a new era in search. BERT lets us feed the query and the document into the model as one sequence with two segments. We extract the class token ([CLS]) embedding from the output and pass it through a final linear head on top of BERT to derive a single score, with the model fine-tuned for the ranking task. This score is then used to rank the passages. In 2019, Nogueira and Cho demonstrated the dramatic gains of BERT-based models on the MS MARCO Passage Ranking task, surpassing the previous state-of-the-art approaches. However, these advancements come at a price: increased computational cost and latency.
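To make the input format concrete, here is a small sketch of how a query and a passage are packed into one two-segment sequence and how a linear head turns the class token embedding into a score. The `toy_encode_cls` function is a hypothetical stand-in for a fine-tuned BERT encoder (it just counts overlapping terms), and the head weights are made up. Only the sequence layout reflects how BERT is actually fed.

```python
def build_input(query_tokens, passage_tokens):
    """Pack query and passage as [CLS] query [SEP] passage [SEP]."""
    tokens = ["[CLS]"] + query_tokens + ["[SEP]"] + passage_tokens + ["[SEP]"]
    # Segment 0 covers [CLS], the query, and the first [SEP]; segment 1 the rest.
    segment_ids = [0] * (len(query_tokens) + 2) + [1] * (len(passage_tokens) + 1)
    return tokens, segment_ids

def linear_head(cls_embedding, weights, bias):
    """Project the class token embedding to a single relevance score."""
    return sum(w * x for w, x in zip(weights, cls_embedding)) + bias

def toy_encode_cls(tokens, segment_ids):
    """Hypothetical encoder stub: NOT BERT, just term overlap and passage length."""
    special = {"[CLS]", "[SEP]"}
    query_terms = {t for t, s in zip(tokens, segment_ids) if s == 0 and t not in special}
    passage_terms = [t for t, s in zip(tokens, segment_ids) if s == 1 and t not in special]
    overlap = sum(1 for t in passage_terms if t in query_terms)
    return [float(overlap), float(len(passage_terms))]

def rerank(query_tokens, passages, encode_cls, weights, bias):
    """Score every passage with one encoder pass per query-passage pair."""
    scored = []
    for passage_id, passage_tokens in passages.items():
        tokens, segment_ids = build_input(query_tokens, passage_tokens)
        cls_embedding = encode_cls(tokens, segment_ids)
        scored.append((linear_head(cls_embedding, weights, bias), passage_id))
    return [passage_id for _, passage_id in sorted(scored, reverse=True)]

passages = {
    "p1": ["bert", "ranks", "passages"],
    "p2": ["neural", "ir", "with", "bert"],
}
ranking = rerank(["neural", "ir"], passages, toy_encode_cls, weights=[1.0, 0.0], bias=0.0)
```

The loop in `rerank` also shows where the cost comes from: the encoder runs once for every query-passage pair, so nothing can be reused across queries.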

Balancing Quality and Latency: The Quest for Better Trade-offs

To achieve high Mean Reciprocal Rank (MRR) and low latency simultaneously, researchers have explored various approaches. One observation is that BERT rankers are computationally redundant: every query-document pair needs its own pass through the model, so the same document is processed again for each new query. A potential solution is to precompute document representations offline with a model like BERT and store them for later retrieval. Most of the document-side work is then already done when a query arrives, which significantly reduces the computational workload and speeds up query answering.
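The precomputation idea can be sketched as a small index that encodes documents once, offline, and compares stored vectors against the query at search time. This is a minimal sketch under stated assumptions: `toy_encode` is a hypothetical bag-of-terms encoder standing in for a BERT-style model, and the dot product is one possible similarity function, not one prescribed by the article.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

class PrecomputedIndex:
    """Stores one vector per document so documents are encoded only once."""

    def __init__(self, encode):
        self.encode = encode
        self.doc_vectors = {}

    def add_documents(self, docs):
        # Offline step: the expensive encoder runs here, not at query time.
        for doc_id, text in docs.items():
            self.doc_vectors[doc_id] = self.encode(text)

    def search(self, query, k=10):
        # Online step: encode the query once, then compare to stored vectors.
        query_vector = self.encode(query)
        scored = [(dot(query_vector, vec), doc_id)
                  for doc_id, vec in self.doc_vectors.items()]
        scored.sort(reverse=True)
        return [doc_id for _, doc_id in scored[:k]]

# Hypothetical encoder: term counts over a tiny fixed vocabulary.
VOCAB = ["bert", "ranking", "latency", "index"]

def toy_encode(text):
    words = text.lower().split()
    return [words.count(term) for term in VOCAB]

index = PrecomputedIndex(toy_encode)
index.add_documents({
    "d1": "bert ranking with bert",
    "d2": "inverted index latency",
    "d3": "ranking latency",
})
top = index.search("bert ranking", k=2)
```

The trade-off in this design is that query and document are encoded independently, so the model no longer sees them together as it does in the two-segment BERT ranker.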

Learning Term Weights: Taming BERT’s Latency

Learning term weights is another approach to mitigating BERT's latency for IR. The document is tokenized and passed through BERT, and a linear layer projects each token's representation into a single numeric score. These document term weights are then stored in an inverted index, allowing for quick retrieval during query answering. This approach builds on BERT's ability to learn stronger term weights than traditional models like BM25. DeepCT, along with the related document-expansion model doc2query, has shown promising results in this efficient paradigm. However, a drawback is that the query reverts to a bag-of-words representation, so the deeper understanding of the query is lost.
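A rough sketch of the retrieval side of this idea follows. The per-term weights are hypothetical numbers standing in for what a trained model would predict, and scoring by summing the weights of matching terms is an assumed, simplified scoring rule. The point is only that query answering becomes an inverted-index lookup with no neural model in the loop.

```python
from collections import defaultdict

def build_inverted_index(doc_term_weights):
    """Map each term to a posting list of (doc_id, learned_weight)."""
    index = defaultdict(list)
    for doc_id, weights in doc_term_weights.items():
        for term, weight in weights.items():
            index[term].append((doc_id, weight))
    return index

def search(index, query, k=10):
    """Treat the query as a bag of words and sum the weights of matching terms."""
    scores = defaultdict(float)
    for term in set(query.lower().split()):
        for doc_id, weight in index.get(term, []):
            scores[doc_id] += weight
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:k]

# Hypothetical weights; in practice a model would predict one score per token.
DOC_TERM_WEIGHTS = {
    "d1": {"bert": 0.9, "latency": 0.2},
    "d2": {"bert": 0.1, "index": 0.8},
}

inverted_index = build_inverted_index(DOC_TERM_WEIGHTS)
results = search(inverted_index, "bert latency")
```

Both documents contain "bert", but the learned weight says it matters far more in `d1` than in `d2`, which is the kind of signal a plain term-frequency count would miss. The query side, by contrast, is just a set of words, which illustrates the drawback noted above.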

FAQs

What is neural IR?

Neural IR is an approach that uses neural networks to improve the effectiveness of information retrieval systems by estimating the relevance of documents to a given query.

How does BERT improve search quality?

BERT processes the query and the document together as one sequence with two segments, so relevance is scored from a joint representation of both, enabling more accurate ranking of search results.

What are the challenges in neural IR?

One challenge is finding a balance between quality and latency. While neural models like BERT deliver high-quality results, they often come with increased computational cost and latency.

How can we reduce the computational workload in BERT-based models?

One approach is to precompute document representations using models like BERT and store them for quick retrieval during query answering.

Conclusion

Neural IR has transformed the search landscape, empowering search engines to provide more relevant results. Models like BERT have demonstrated remarkable improvements in search quality. However, achieving a balance between quality and latency remains a challenge. By exploring innovative approaches, such as precomputing document representations and learning term weights, researchers continue to make strides towards achieving high MRR and low computational cost in neural IR models.

Visit Techal.org for more insightful articles on technology.