Welcome back to our series on Contextual Word Representations. In this installment, we delve into RoBERTa, a robustly optimized BERT approach. Inspired by the limitations of the original BERT model, the RoBERTa team set out to explore the uncharted territories in this space.

Contents

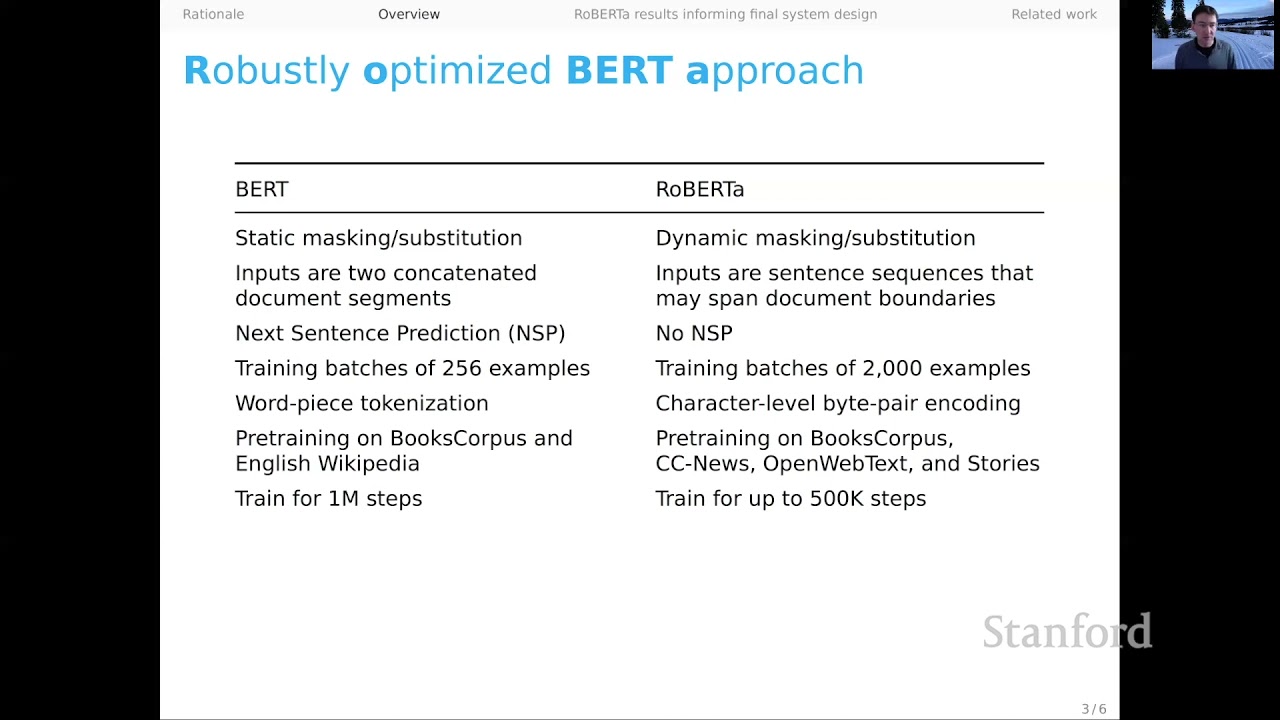

Static vs. Dynamic Masking

One of the central differences between BERT and RoBERTa lies in their approach to masking. While BERT created four copies of their dataset with different masking, RoBERTa adopted dynamic masking. Each example presented to the model is masked in a potentially different way using a random function. This injection of diversity in the training process proved useful and led to improved performance.

Changes in Example Presentation

BERT utilized concatenated document segments, crucial for its next sentence prediction task. In contrast, RoBERTa simplifies the example presentation by using sentence sequences (pairs), which can even span document boundaries. Additionally, RoBERTa drops the next sentence prediction task, streamlining the modeling objective.

Training Batches and Tokenization

RoBERTa significantly scaled up the training process. It increased the batch size from 256 in BERT to a whopping 2000. Furthermore, RoBERTa adopted a simplified tokenization approach, using character-level byte-pair encoding instead of the word piece tokenization employed by BERT. This change intuitively results in more word pieces.

Training Data and Steps

RoBERTa trained on a range of corpora, including BooksCorpus, CC-News corpus, OpenWebText corpus, and Stories corpus, significantly increasing the amount of training data. Although RoBERTa was trained for 500,000 steps compared to BERT’s 1 million steps, the larger batch sizes compensate for the reduced number of steps.

Optimizer and Data Presentation

Beyond the mentioned differences, RoBERTa introduces various other changes related to the optimizer and data presentation. For a detailed understanding of these aspects, we encourage you to refer to section 3.1 of the RoBERTa paper.

Evidence and Performance

RoBERTa’s dynamic masking approach proved to be consistently better across multiple benchmarks, including SQuAD, MNLI, and SST-2. The choices made in presenting examples also played a crucial role. While the DOC-SENTENCES approach yielded better numerical results, RoBERTa adopted the FULL-SENTENCES approach for ease of creating batches of the same size.

Moreover, the batch size of 2K was identified as the optimal size for RoBERTa, striking a balance between different benchmarks and pseudo-perplexity values. By increasing the amount of training, RoBERTa achieved better performance compared to BERT.

Conclusion

Although RoBERTa represents a significant advancement in contextual word representations, it is essential to recognize that it only scratches the surface of the vast potential design choices in this field. For a deeper dive into the world of models like BERT and RoBERTa, we highly recommend reading “The Primer in BERTology,” a comprehensive paper that offers additional wisdom and insights.

If you want to stay up-to-date with the latest advancements in technology, don’t forget to visit Techal for more informative articles and guides.

FAQs

Q: What is the main difference between BERT and RoBERTa?

A: One of the main differences is RoBERTa’s use of dynamic masking instead of BERT’s static masking. RoBERTa also presents examples differently and simplifies tokenization.

Q: Does dynamic masking improve performance?

A: Yes, according to benchmarks, dynamic masking consistently outperforms static masking across multiple tasks.

Q: How does RoBERTa increase the amount of training data?

A: RoBERTa trained on multiple corpora, including BooksCorpus, CC-News corpus, OpenWebText corpus, and Stories corpus, significantly increasing the amount of training data compared to BERT.

Q: Why does RoBERTa use character-level byte-pair encoding for tokenization?

A: RoBERTa simplifies tokenization compared to BERT by using character-level byte-pair encoding. This approach leads to more word pieces and a more intuitive tokenization process.

Conclusion

RoBERTa represents a major breakthrough in contextual word representations. By exploring novel approaches and optimizing various aspects, RoBERTa outperforms its predecessor in multiple benchmarks. However, the field of contextual word representations continues to evolve, offering immense potential for further exploration and advancements.

Stay tuned to Techal for more articles and updates on the ever-evolving world of technology.