Welcome aboard, fellow knowledge seekers! Prepare to embark on an exciting journey through the depths of linear regression. Today, we’ll be unlocking the secrets behind this powerful statistical technique that has captured the hearts of data analysts and researchers alike.

Before we dive in, allow me to introduce myself. I’m an ardent tech enthusiast with over a decade of experience in the world of information technology. I specialize in search engine optimization, emotional writing, and all things related to the wondrous field of IT. And today, I’ll be your guide on this captivating adventure into the realm of linear regression.

But first, let me paint a vivid picture for you. Imagine sailing on a boat, cruising towards a place called “Stat Quest.” The mere mention of this destination fills your heart with anticipation. It’s like a secret oasis, hidden away in the depths of knowledge and expertise. You’ve heard whispers of its wonders, and now, you’re about to witness them firsthand.

At Stat Quest, the friendly folks at the University of North Carolina’s genetics department have crafted a comprehensive series on linear regression. It’s called “Linear Regression, AKA General Linear Models – Part One.” Buckle up, my friends, because this concept is about to blow your mind.

Contents

- The Art of Fitting a Line: Unveiling the Power of Least Squares

- Unveiling the Mysteries of Mouse Size Prediction

- Unleashing the Power of Least Squares: Fitting the Perfect Line

- The Secrets of r Squared: Unveiling the Power of Variation

- The Magnificence of r Squared: Unveiling the Mysteries of Variation

- Exploring the Limitations of r Squared: When Mouse Weight Falls Short

- Beware the Lure of Extravagant Equations

- Unveiling the Power of p-Value: A Statistical Quest

- Conclusion: A Journey of Exploration and Discovery

The Art of Fitting a Line: Unveiling the Power of Least Squares

Picture this: you’re faced with a vast dataset, brimming with information. Your goal? To find the relationship between two variables – let’s say, mouse weight and mouse size. But how do you go about it? Fear not, my friends, for the answer lies in the magic of least squares.

In the enchanting world of linear regression, the first step is to use least squares to fit a line to the data. It’s like finding the perfect puzzle piece that seamlessly connects the dots. The line that emerges becomes a guiding light, illuminating the relationship between mouse weight and mouse size.

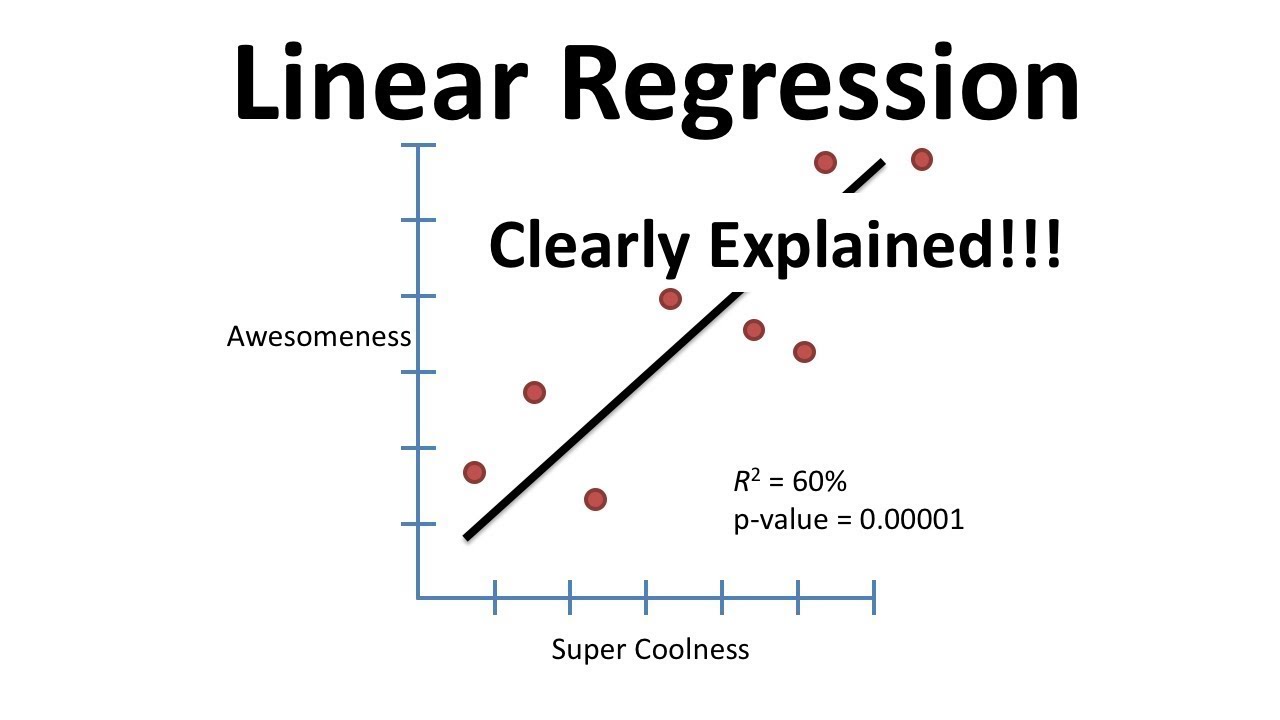

But we can’t stop there. The next step is to calculate a metric called r squared. It’s like a magnifying glass, scrutinizing the accuracy of our line. How well does it predict the mouse size based on weight? R squared reveals the answer, providing a measure of the explained variation in mouse size.

And here’s where things get even more fascinating. We can even determine the p-value for r squared. This remarkable little number tells us if the relationship we observed is statistically significant. It’s the litmus test that separates the meaningful from the trivial.

Unveiling the Mysteries of Mouse Size Prediction

Now, let’s take a moment to delve into the intricacies of fitting a line to data. In our little mouse world, we have a dataset that features the weight and size of several mice. Our mission? To use mouse weight as a predictor for mouse size.

So, we begin by drawing a line through the data, connecting the weight and size measurements. But we don’t stop there. We calculate the residuals, which represent the vertical distances between the line and each data point. By squaring these residuals and summing them up, we get a measure of the overall “fit” of our line: the smaller the sum, the better the fit.

But the adventure doesn’t end there. We rotate the line slightly, recalculating the residuals and summing up their squares once again. We repeat this process, each rotation unveiling a new perspective on the relationship between weight and size.

As we chart our course through the rotations, we create a graph. On the y-axis, we have the sum of squared residuals, representing the “fit” of our line. On the x-axis, we have the different rotations. And then, like a compass guiding us to our destination, we find the rotation with the least sum of squares.
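
The rotation hunt described above can be sketched in a few lines of Python. Treat it as an illustration under stated assumptions rather than the exact procedure from the series: the measurements are made up, the candidate lines pivot around the point of average weight and average size (the walkthrough does not say where the line pivots), and the helper name `sum_squared_residuals` is hypothetical.

```python
import math

def sum_squared_residuals(xs, ys, intercept, slope):
    """Sum of squared vertical distances between the line and each point."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))

# Made-up mouse measurements, for illustration only.
weights = [1.0, 2.0, 3.0, 4.0, 5.0]
sizes = [1.4, 1.9, 3.2, 3.7, 4.8]

mean_weight = sum(weights) / len(weights)
mean_size = sum(sizes) / len(sizes)

# Try one rotation per degree, from -80 to 80 (assumed range and step).
results = []
for degrees in range(-80, 81):
    slope = math.tan(math.radians(degrees))
    # Choose the intercept so the line always passes through the mean point.
    intercept = mean_size - slope * mean_weight
    ssr = sum_squared_residuals(weights, sizes, intercept, slope)
    results.append((ssr, degrees))

# The rotation with the least sum of squares wins.
best_ssr, best_degrees = min(results)
print(f"best rotation: {best_degrees} degrees, sum of squares: {best_ssr:.4f}")
```

Plotting the `results` list would give the graph described above: the sum of squared residuals on the y-axis and the rotations on the x-axis.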

Unleashing the Power of Least Squares: Fitting the Perfect Line

Behold, fellow adventurers, for we have discovered the power of least squares! Our line fitting process has led us to the ultimate revelation – the line that best aligns with our data.

With this newfound knowledge, we proudly present our least squares rotation, superimposed on the original data. It’s like a eureka moment that solidifies our understanding of why the method is called “least squares.” We’ve accomplished the fitting of a line, and it’s truly awe-inspiring.

But wait, there’s more! Let’s unravel the equation for our magnificent line. Least squares estimation blesses us with two critical parameters – the y-axis intercept and the slope. These values hold the key to unlocking the relationship between mouse weight and mouse size.

And here’s the exciting part: the non-zero slope signifies that mouse weight can help us predict a mouse’s size. Isn’t that incredible? The weight of a mouse becomes a vital clue that guides us towards unraveling its size. Oh, the wonders of linear regression!
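
In practice nobody rotates the line by hand, because the intercept and slope that minimize the sum of squares have a closed-form solution. Here is a minimal sketch, assuming made-up measurements and a hypothetical helper named `fit_line`; neither comes from the original series.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) of the least squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how x and y vary together, divided by how much x varies.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    # The least squares line always passes through the point of means.
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Made-up mouse measurements, for illustration only.
weights = [1.0, 2.0, 3.0, 4.0, 5.0]
sizes = [1.4, 1.9, 3.2, 3.7, 4.8]

intercept, slope = fit_line(weights, sizes)
print(f"size = {intercept:.2f} + {slope:.2f} * weight")
```

With these invented numbers the slope is clearly not zero, so knowing the weight helps us guess the size.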

The Secrets of r Squared: Unveiling the Power of Variation

Now that we have fitted our line, it’s time to embark on a quest to comprehend the concept of r squared. Brace yourselves, my friends, for we are about to dive deep into the heart of variation.

R squared, that mystical number, reveals how much of the variation in mouse size can be explained by mouse weight. It’s like a hidden treasure, waiting to be uncovered. The formula for r squared compares the variation around the mean with the variation around our fitted line.

But let’s not rush into the details just yet. First, we calculate the average mouse size and shift our focus solely to this dimension. We draw a black line to represent this average, emphasizing its significance. It serves as our anchor in the sea of data, guiding us towards understanding.

We then journey into the realm of squared residuals. These residuals represent the distances between the mean and each data point. By squaring them and summing them up, we capture a measure of the variation around the mean, or what we call the “sum of squares around the mean.”

But we’re not done yet. We return to our fitted line and measure the squared residuals once again. This time, we’re exploring the variation around our fitted line, which we call the “sum of squares around the fitted line.” Because the least squares line sits as close to the data as a straight line can, this sum can never be larger than the sum of squares around the mean.
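
The two sums of squares can be computed side by side. The sketch below is only an illustration: the data are made up, and the names `ss_mean` and `ss_fit` are shorthand chosen here for the “sum of squares around the mean” and the “sum of squares around the fitted line.”

```python
# Made-up mouse measurements, for illustration only.
weights = [1.0, 2.0, 3.0, 4.0, 5.0]
sizes = [1.4, 1.9, 3.2, 3.7, 4.8]

n = len(sizes)
mean_weight = sum(weights) / n
mean_size = sum(sizes) / n

# Least squares slope and intercept (closed-form solution).
sxy = sum((w - mean_weight) * (s - mean_size) for w, s in zip(weights, sizes))
sxx = sum((w - mean_weight) ** 2 for w in weights)
slope = sxy / sxx
intercept = mean_size - slope * mean_weight

# Squared distances from each point to the horizontal line at the mean.
ss_mean = sum((s - mean_size) ** 2 for s in sizes)

# Squared distances from each point to the fitted line.
ss_fit = sum((s - (intercept + slope * w)) ** 2 for w, s in zip(weights, sizes))

print(f"sum of squares around the mean: {ss_mean:.3f}")
print(f"sum of squares around the fit:  {ss_fit:.3f}")
```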

The Magnificence of r Squared: Unveiling the Mysteries of Variation

As we journey deeper into the mysteries of r squared, let’s pause for a moment to ponder the true essence of variance. Variance, my dear friends, is the average sum of squares per mouse: the sum of squares divided by the number of mice in our dataset.

Now, let’s reconnect with the original plot, capturing the sum of squared residuals around our fitted line. We refer to this as the “sum of squares around the fitted line,” representing the distances between the line and each data point squared.

By dividing this sum of squares by the sample size, we obtain the variation around the fit: the sum of squares around the fit divided by the number of mice. The variation around the mean is calculated the same way, using the sum of squares around the mean.

Think of it as a voyage through the depths of mathematical elegance. We decipher the wonders of variation, understanding its essence and significance. The variation around the fit becomes a beacon of hope, guiding us towards comprehending the intricate relationship between mouse weight and mouse size.

And here’s the revelation we’ve been waiting for: r squared is the variation around the mean minus the variation around the fit, all divided by the variation around the mean. In other words, it is the fraction of the variation in mouse size that disappears once we take mouse weight into account.
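
To make the arithmetic concrete, here is a hedged sketch that turns the sums of squares into variances and then into r squared, using the standard definition: the variation around the mean minus the variation around the fit, divided by the variation around the mean. The data are made up and the variable names are chosen here for illustration.

```python
# Made-up mouse measurements, for illustration only.
weights = [1.0, 2.0, 3.0, 4.0, 5.0]
sizes = [1.4, 1.9, 3.2, 3.7, 4.8]

n = len(sizes)
mean_weight = sum(weights) / n
mean_size = sum(sizes) / n

# Least squares slope and intercept (closed-form solution).
sxy = sum((w - mean_weight) * (s - mean_size) for w, s in zip(weights, sizes))
sxx = sum((w - mean_weight) ** 2 for w in weights)
slope = sxy / sxx
intercept = mean_size - slope * mean_weight

ss_mean = sum((s - mean_size) ** 2 for s in sizes)
ss_fit = sum((s - (intercept + slope * w)) ** 2 for w, s in zip(weights, sizes))

# Variance: the average sum of squares per mouse.
var_mean = ss_mean / n
var_fit = ss_fit / n

r_squared = (var_mean - var_fit) / var_mean

# Both variances divide by the same n, so it cancels out and the raw
# sums of squares give the same answer.
r_squared_from_ss = (ss_mean - ss_fit) / ss_mean

print(f"r squared: {r_squared:.4f}")
```

For this invented dataset, mouse weight explains roughly 98% of the variation in mouse size.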

Exploring the Limitations of r Squared: When Mouse Weight Falls Short

But let’s not forget that the journey ahead is not without its challenges. There are instances when mouse weight fails to enhance our predictions of mouse size, leaving us perplexed and yearning for answers.

Imagine a scenario where mouse weight offers no reliable clues about a mouse’s size. It’s like sailing into uncharted waters, unsure of what lies ahead. In these moments, the variation around the mean remains equal to the variation around the fit, thwarting our attempts to uncover the mysteries of size prediction.

This brings us to the concept of r squared. In cases like these, r squared equals zero, signifying that mouse weight fails to explain any variation around the mean. It’s a reminder that there is still much to unravel in the intricate web of data analysis.
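
Here is a small sketch of that situation, with measurements deliberately invented so that weight carries no linear information about size. The helper name `r_squared` is hypothetical.

```python
def r_squared(xs, ys):
    """Fraction of the variation in ys explained by a least squares line on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_mean = sum((y - mean_y) ** 2 for y in ys)
    ss_fit = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return (ss_mean - ss_fit) / ss_mean

# Invented data: sizes go up and then down, so the best line is flat.
weights = [1.0, 2.0, 3.0, 4.0, 5.0]
sizes = [2.0, 4.0, 3.0, 4.0, 2.0]

useless = r_squared(weights, sizes)
print(f"r squared: {useless:.2f}")
```

The fitted line ends up horizontal at the average size, so the variation around the fit equals the variation around the mean and r squared is zero.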

Beware the Lure of Extravagant Equations

In the quest for knowledge, we must also be wary of pitfalls along the way. The allure of complex equations, with their myriad of parameters, may tempt us into a never-ending maze of possibilities. But fear not, for least squares is our guiding light, illuminating the path to truth and simplicity.

As we expand our equation to include additional parameters, we must tread carefully. Each extra parameter gives random chance another opportunity to reduce the sum of squares around the fit, resulting in a better r squared even when the parameter tells us nothing real. We mustn’t be swayed by the allure of false promises, for a true understanding of the relationship between variables requires meaningful insights.

That’s why people report an adjusted r squared value, which scales r squared by the number of parameters. It’s like a filter that refines our understanding, penalizing parameters that do not earn their place in the equation.
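
The walkthrough does not spell out the adjustment, so the sketch below assumes one widely used definition: 1 - (1 - r squared) * (n - 1) / (n - p - 1), where n is the number of mice and p is the number of predictors in the equation. The function name and the example numbers are made up for illustration.

```python
def adjusted_r_squared(r_squared, n_samples, n_predictors):
    """Adjusted r squared under one common definition (an assumption here)."""
    penalty = (n_samples - 1) / (n_samples - n_predictors - 1)
    return 1.0 - (1.0 - r_squared) * penalty

# Same raw r squared and sample size, different numbers of predictors.
plain = 0.90
one_predictor = adjusted_r_squared(plain, n_samples=10, n_predictors=1)
four_predictors = adjusted_r_squared(plain, n_samples=10, n_predictors=4)

print(f"1 predictor:  {one_predictor:.4f}")
print(f"4 predictors: {four_predictors:.4f}")
```

The same raw r squared looks less impressive once it has to be shared among four predictors instead of one.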

Unveiling the Power of p-Value: A Statistical Quest

And now, my fellow explorers, we reach the climax of our journey – the p-value. This magical number holds the key to determining the statistical significance of our findings. It’s like a guiding star, illuminating the path to truth amidst a sea of uncertainty.

To calculate the p-value for r squared, we embark on a statistical quest. We generate random datasets and, for each one, calculate the sum of squares around the mean and the sum of squares around the fit. With each step, we plug these values into our equation for f, which compares the variation explained by mouse weight with the variation that remains unexplained.

As we traverse this statistical landscape, we create a histogram, mapping the distribution of our f-values. Each random dataset reveals a new point on the histogram, adding depth to our understanding. And finally, we return to our original data, plugging in the numbers and calculating our own f-value.

At last, we have our p-value: the number of randomly generated f-values that are at least as extreme as our own, divided by the total number of f-values we generated. It’s like a stamp of authenticity, gauging the significance of our findings. With r squared and the p-value at our disposal, we can confidently navigate the choppy waters of data analysis.
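
The whole quest can be sketched as a small simulation. Several things here are assumptions rather than details from the series: the measurements are made up, the random datasets are drawn from a standard normal distribution, the seed and the count of 2000 datasets are arbitrary, and f is taken to be the usual F statistic, (SS(mean) - SS(fit)) / (p_fit - p_mean) divided by SS(fit) / (n - p_fit), with p_fit = 2 parameters for the line and p_mean = 1 for the mean.

```python
import random

def sums_of_squares(xs, ys):
    """Return (sum of squares around the mean, sum of squares around the fit)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_mean = sum((y - mean_y) ** 2 for y in ys)
    ss_fit = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return ss_mean, ss_fit

def f_value(xs, ys, p_fit=2, p_mean=1):
    """Variation explained by the fit relative to the variation left over."""
    ss_mean, ss_fit = sums_of_squares(xs, ys)
    explained = (ss_mean - ss_fit) / (p_fit - p_mean)
    unexplained = ss_fit / (len(xs) - p_fit)
    return explained / unexplained

# Made-up mouse measurements, for illustration only.
weights = [1.0, 2.0, 3.0, 4.0, 5.0]
sizes = [1.4, 1.9, 3.2, 3.7, 4.8]
observed_f = f_value(weights, sizes)

# Random datasets of the same size with no real relationship between x and y.
rng = random.Random(0)  # fixed seed so the run is repeatable
n = len(weights)
random_fs = []
for _ in range(2000):
    xs = [rng.gauss(0, 1) for _ in range(n)]
    ys = [rng.gauss(0, 1) for _ in range(n)]
    random_fs.append(f_value(xs, ys))

# p-value: share of random f-values at least as extreme as the observed one.
p_value = sum(f >= observed_f for f in random_fs) / len(random_fs)
print(f"observed f: {observed_f:.2f}, p-value: {p_value:.4f}")
```

The `random_fs` list is what the histogram would be drawn from. Statistical software typically replaces the simulation with the theoretical F distribution, which yields a p-value without generating thousands of random datasets.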

Conclusion: A Journey of Exploration and Discovery

Congratulations, my fellow adventurers! You have successfully traversed the treacherous world of linear regression. Armed with the power of least squares, r squared, and the p-value, you are now equipped to unravel the secrets hidden within data.

But remember, our quest doesn’t end here. The realms of linear regression hold countless treasures, waiting to be discovered. So, join me on this perpetual journey of exploration and discovery. Together, we shall unlock the wonders of statistical analysis, one insight at a time.

And before we part ways, allow me to share a little secret with you – Techal. If you seek a haven of knowledge and expertise in the realm of technology, look no further than Techal. It’s a sanctuary for tech enthusiasts, offering a wealth of information and insights. So, be sure to venture forth and explore the wonders of Techal.

Until we meet again, my dear friends, keep seeking knowledge, challenging boundaries, and embracing the magic of data analysis. Farewell, and may your statistical endeavors be forever fruitful!