Welcome to the first article of the Techal brand’s series on Natural Language Understanding. In this article, we will explore the high-level goals and guiding hypotheses for understanding the meaning of words and how they are represented in text data.


The Power of Word Co-Occurrence

We begin by examining distributed word representations, also called vector representations of words. Imagine a large word-by-word co-occurrence matrix in which each row and each column corresponds to a word in the vocabulary. Each cell records how many times the row word appears together with the column word in a text corpus, for example within the same sentence or within a few tokens of each other.
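To make the co-occurrence matrix concrete, here is a minimal Python sketch. It assumes pre-tokenized sentences and a symmetric context window; the function name `cooccurrence_counts`, its `window` parameter, and the two-sentence toy corpus are illustrative choices, not part of any particular library. Passing `window=None` treats the whole sentence as the context, which shows how the choice of context changes what counts as co-occurrence.

```python
from collections import Counter
from typing import List, Optional, Tuple


def cooccurrence_counts(
    sentences: List[List[str]], window: Optional[int] = 2
) -> Tuple[List[str], List[List[int]]]:
    """Return (vocab, matrix) where matrix[i][j] counts how often vocab[i]
    appears within `window` tokens of vocab[j] in the same sentence.
    With window=None, every other token in the sentence counts as context."""
    vocab = sorted({word for sentence in sentences for word in sentence})
    index = {word: i for i, word in enumerate(vocab)}
    pair_counts: Counter = Counter()
    for sentence in sentences:
        for i, target in enumerate(sentence):
            for j, context in enumerate(sentence):
                if i == j:
                    continue  # a token is not its own context
                if window is not None and abs(i - j) > window:
                    continue  # outside the context window
                pair_counts[(index[target], index[context])] += 1
    size = len(vocab)
    # Counter returns 0 for unseen pairs, so the matrix is dense.
    matrix = [[pair_counts[(r, c)] for c in range(size)] for r in range(size)]
    return vocab, matrix


# Hypothetical toy corpus, for illustration only.
toy_corpus = [
    ["the", "movie", "was", "excellent"],
    ["the", "plot", "was", "terrible"],
]
vocab, counts = cooccurrence_counts(toy_corpus, window=1)
print(vocab)  # ['excellent', 'movie', 'plot', 'terrible', 'the', 'was']
print(counts[vocab.index("was")])  # [1, 1, 1, 1, 0, 0]
```

Because co-occurrence within a symmetric window is mutual, the resulting matrix is symmetric; a real corpus would simply produce a much larger and sparser version of the same table.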

Believe it or not, these co-occurrence patterns carry latent meaning. Although extracting meaning from such an abstract space may seem challenging, we will see that it is a powerful basis for building meaningful representations.

Let’s consider a small thought experiment. Suppose we have a sentiment lexicon in which each word is labeled as either positive or negative. If we also know how often each word co-occurs with “excellent” and with “terrible” in a text corpus, we can often predict the sentiment of new, unlabeled words quite accurately. Even a simple classifier, or a single decision rule such as “positive if the word appears more often with ‘excellent’ than with ‘terrible’,” performs well.
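The thought experiment can be sketched in a few lines of Python. This is a minimal illustration under explicit assumptions: the co-occurrence counts below are invented rather than measured, the add-one smoothed log-ratio is just one possible decision rule, and `COUNTS`, `sentiment_score`, and `predict` are hypothetical names.

```python
import math
from typing import Dict, Tuple

# Invented counts: (co-occurrences with "excellent", co-occurrences with "terrible").
# These are NOT measurements from a real corpus.
COUNTS: Dict[str, Tuple[int, int]] = {
    "superb": (41, 3),
    "wonderful": (37, 5),
    "awful": (2, 44),
    "dreadful": (4, 29),
    # Words we treat as new and unlabeled.
    "brilliant": (33, 6),
    "abysmal": (1, 25),
}

# Hypothetical labeled lexicon used to sanity-check the rule.
LABELED = {
    "superb": "positive",
    "wonderful": "positive",
    "awful": "negative",
    "dreadful": "negative",
}


def sentiment_score(word: str) -> float:
    """Add-one smoothed log-ratio of 'excellent' vs 'terrible' co-occurrence."""
    with_excellent, with_terrible = COUNTS[word]
    return math.log((with_excellent + 1) / (with_terrible + 1))


def predict(word: str) -> str:
    """Decision rule: positive if the word keeps more 'excellent' company."""
    return "positive" if sentiment_score(word) > 0 else "negative"


accuracy = sum(predict(w) == label for w, label in LABELED.items()) / len(LABELED)
print(f"accuracy on labeled words: {accuracy:.2f}")  # 1.00 on these toy counts
for word in ("brilliant", "abysmal"):
    print(word, predict(word))
```

The smoothing keeps the score defined when a word never co-occurs with one of the two anchor words; with real data, a learned classifier over both counts would be the natural next step.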

This experiment shows how predictive co-occurrence patterns can be: just two context words carry a strong sentiment signal. Vector space models with hundreds or thousands of dimensions can therefore potentially encode far more information about meaning.

High-Level Goals

Our high-level goals in this article are to:

- Explore how vector representations encode the meanings of words.

- Introduce foundational concepts for vector space models, whose word vectors are often called embeddings, and show their relevance to modern deep learning models.

- Provide valuable insights for assignments and original project work.

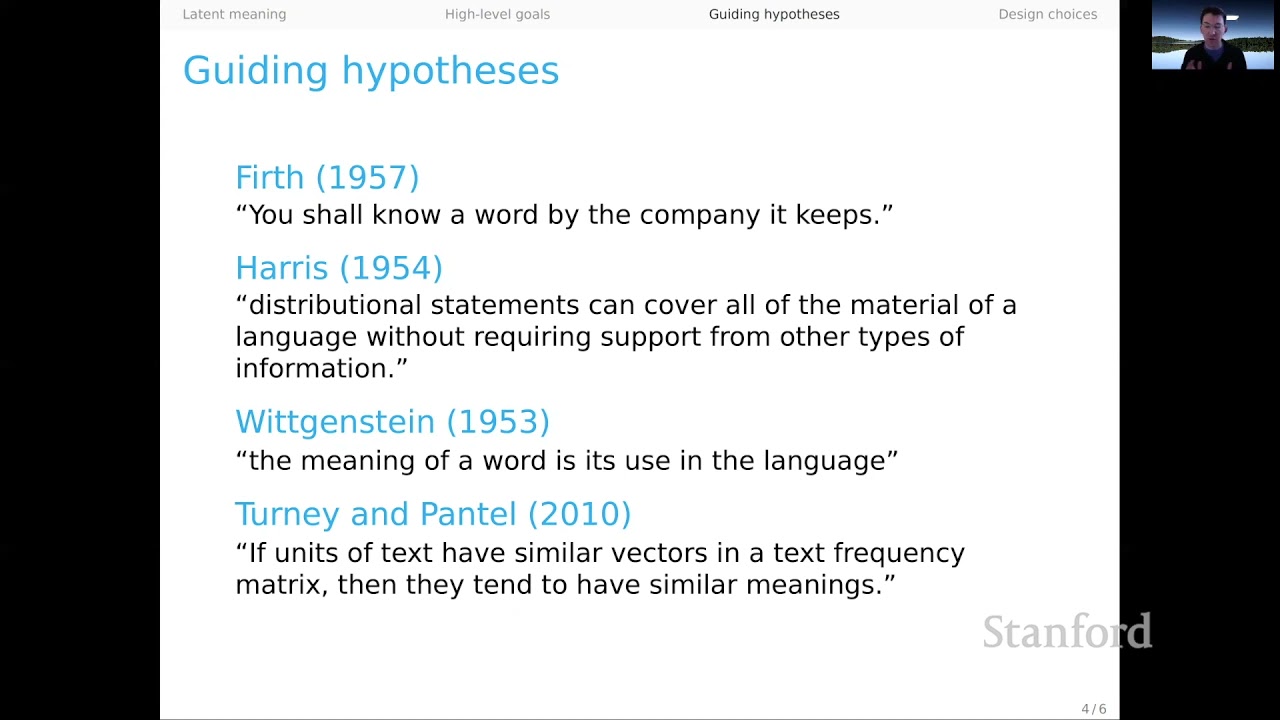

Guiding Hypotheses

To guide us in our exploration, we turn to the literature. The following hypotheses are central to our understanding:

- J. R. Firth: “You shall know a word by the company it keeps.” Distributional information is a trustworthy guide for linguistic analysis.

- Zellig Harris: “Distributional statements can cover all of the material of a language without requiring support from other types of information.” Usage information is a powerful tool in understanding language.

- Ludwig Wittgenstein: “The meaning of a word is its use in the language.” Wittgenstein’s view is compatible with Firth and Harris: if meaning is use, then patterns of usage, including co-occurrence patterns, are evidence about meaning.

- Turney and Pantel: “If units of text have similar vectors in a text frequency matrix, then they tend to have similar meanings.” This hypothesis allows us to leverage co-occurrence matrices for making inferences about similarity of meaning.
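Turney and Pantel’s hypothesis suggests comparing rows of a count matrix directly. The minimal sketch below assumes invented counts over four hypothetical context columns and uses cosine similarity, one common way to compare vectors but not the only one, to check whether words with similar distributions get similar vectors.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # an all-zero row has no direction to compare
    return dot / (norm_u * norm_v)


# Invented rows of a count matrix; columns are the hypothetical contexts
# ["excellent", "terrible", "movie", "food"].
rows: Dict[str, List[int]] = {
    "superb": [40, 3, 12, 9],
    "great": [35, 4, 10, 11],
    "awful": [2, 38, 11, 8],
}

for other in ("great", "awful"):
    score = cosine_similarity(rows["superb"], rows[other])
    print(f"superb vs {other}: {score:.3f}")
```

On these toy counts, the row for “superb” points in nearly the same direction as the row for “great” and scores far higher than “awful”, which is exactly the kind of inference the hypothesis licenses.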

Design Choices and Considerations

Designing effective word representations involves several choices and considerations. Here are some key aspects to consider:

- Matrix Design: Decide which matrix design suits your needs, such as word-by-word, word-by-document, or other variations. Each design captures different distributional facts.

- Text Grouping: Determine how you will group your text for co-occurrence analysis. This could be based on sentences, documents, or specific contexts like date, author, or discourse. Different groupings yield different notions of co-occurrence.

- Matrix Adjustment: Consider reweighting the count matrix. Raw counts are dominated by very frequent words, and reweighting schemes such as pointwise mutual information (PMI) or TF-IDF can bring out latent information about meaning. We will explore these methods in future articles.

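- Dimensionality Reduction: To capture higher-order notions of co-occurrence, you may apply a dimensionality reduction technique such as singular value decomposition (SVD). Words that rarely co-occur directly but share many neighbors can then end up close together. This step adds power to the model but introduces further choices, such as which method to use and how many dimensions to keep.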

- Similarity Metrics: Choose a method for comparing word vectors, such as Euclidean distance, cosine distance, or Jaccard distance. The right choice may depend on earlier decisions in the design process; for example, Jaccard distance assumes non-negative values, so it suits raw or reweighted counts better than SVD output.
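The reweighting, reduction, and comparison steps above can be chained together. The sketch below assumes NumPy and uses positive pointwise mutual information (PPMI) for reweighting and a truncated SVD for reduction; these are common choices picked for illustration, not the only options, and the word-by-context counts are invented. It also illustrates why the metric depends on earlier steps: weighted Jaccard distance is computed only on the non-negative PPMI matrix, since SVD output can contain negative values.

```python
import numpy as np


def ppmi(counts: np.ndarray) -> np.ndarray:
    """Positive PMI: max(0, log P(w, c) / (P(w) P(c))), estimated from counts."""
    joint = counts / counts.sum()
    row_probs = joint.sum(axis=1, keepdims=True)  # P(w)
    col_probs = joint.sum(axis=0, keepdims=True)  # P(c)
    with np.errstate(divide="ignore", invalid="ignore"):
        pmi = np.log(joint / (row_probs * col_probs))
    pmi[~np.isfinite(pmi)] = 0.0  # zero counts give log(0); treat as no association
    return np.maximum(pmi, 0.0)  # clip negative associations to 0


def truncated_svd(matrix: np.ndarray, k: int) -> np.ndarray:
    """Represent each row by its coordinates on the top-k singular directions."""
    u, s, _vt = np.linalg.svd(matrix, full_matrices=False)
    return u[:, :k] * s[:k]


def euclidean_distance(u: np.ndarray, v: np.ndarray) -> float:
    return float(np.linalg.norm(u - v))


def cosine_distance(u: np.ndarray, v: np.ndarray) -> float:
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    return 1.0 - float(u @ v / denom) if denom else 1.0


def jaccard_distance(u: np.ndarray, v: np.ndarray) -> float:
    """Weighted Jaccard distance; only meaningful for non-negative vectors."""
    denom = np.maximum(u, v).sum()
    return 1.0 - float(np.minimum(u, v).sum() / denom) if denom else 0.0


# Invented word-by-context counts, for illustration only.
words = ["superb", "great", "awful", "dreadful"]
contexts = ["excellent", "terrible", "acting", "plot", "the"]  # column labels
counts = np.array(
    [
        [9, 1, 4, 3, 20],
        [8, 1, 3, 4, 22],
        [1, 9, 4, 3, 21],
        [1, 8, 3, 4, 19],
    ],
    dtype=float,
)

weighted = ppmi(counts)
reduced = truncated_svd(weighted, k=2)

for name, space in (("PPMI", weighted), ("SVD k=2", reduced)):
    print(name,
          "cosine superb~great:", round(cosine_distance(space[0], space[1]), 3),
          "superb~awful:", round(cosine_distance(space[0], space[2]), 3),
          "euclidean superb~great:", round(euclidean_distance(space[0], space[1]), 3))
print("Jaccard superb~great (PPMI only):", round(jaccard_distance(weighted[0], weighted[1]), 3))
```

In the PPMI space of these toy counts, “superb” is closer to “great” than to “awful” under cosine distance, and the very frequent context “the” contributes almost nothing after reweighting. With real data, the best combination of reweighting, dimensionality, and metric has to be found empirically.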

For those looking for a more streamlined approach, models like GloVe and word2vec offer packaged solutions, handling matrix design, weighting, and reduction steps. These models simplify the decision-making process and can deliver well-scaled vectors, making the choice of similarity metric less critical.

More recent contextual embedding models take over even more of these design choices, in some cases starting from tokenization itself. They offer unified answers to many of the questions in this decision space.

It is worth noting that baseline models built from simpler approaches are often competitive with more advanced models. The best combination of choices has to be found empirically, through systematic experimentation.

FAQs

Q: What are distributed word representations?

A: Distributed word representations, also known as vector representations of words, capture the meanings of words based on their co-occurrence patterns in a text corpus. These representations encode semantic information within high-dimensional vector spaces.

Q: How are word representations useful in natural language understanding?

A: Word representations allow us to analyze and understand the meanings of words in a computational manner. By leveraging co-occurrence patterns, we can perform tasks such as sentiment analysis, document classification, and information retrieval more effectively.

Q: Do all word representations follow the same design choices?

A: No, design choices for word representations can vary depending on the specific goals and requirements of the task. Different matrix designs, text groupings, and dimensionality reduction techniques can yield different results. It is important to consider the specific context and desired outcomes when making these choices.

Conclusion

In this article, we have explored the high-level goals and guiding hypotheses for understanding word meanings through distributed word representations. We have also discussed the design choices and considerations that need to be made when working with co-occurrence matrices.

Understanding the nuances and power of word representations is crucial for advancing natural language understanding tasks. In the next articles, we will delve deeper into specific methods and techniques for creating meaningful representations and utilizing them in practical applications.


Image source: Unsplash