Welcome to an exhilarating journey into the world of AdaBoost! In this article, we will unravel the mysteries surrounding AdaBoost, a powerful ensemble learning algorithm that combines many small decision trees (weak learners) into one accurate classifier. Whether you’re a beginner or an expert, we will break down the concepts behind AdaBoost, delve into the nitty-gritty details of how it works, and walk through an example step by step.

Understanding the Basics

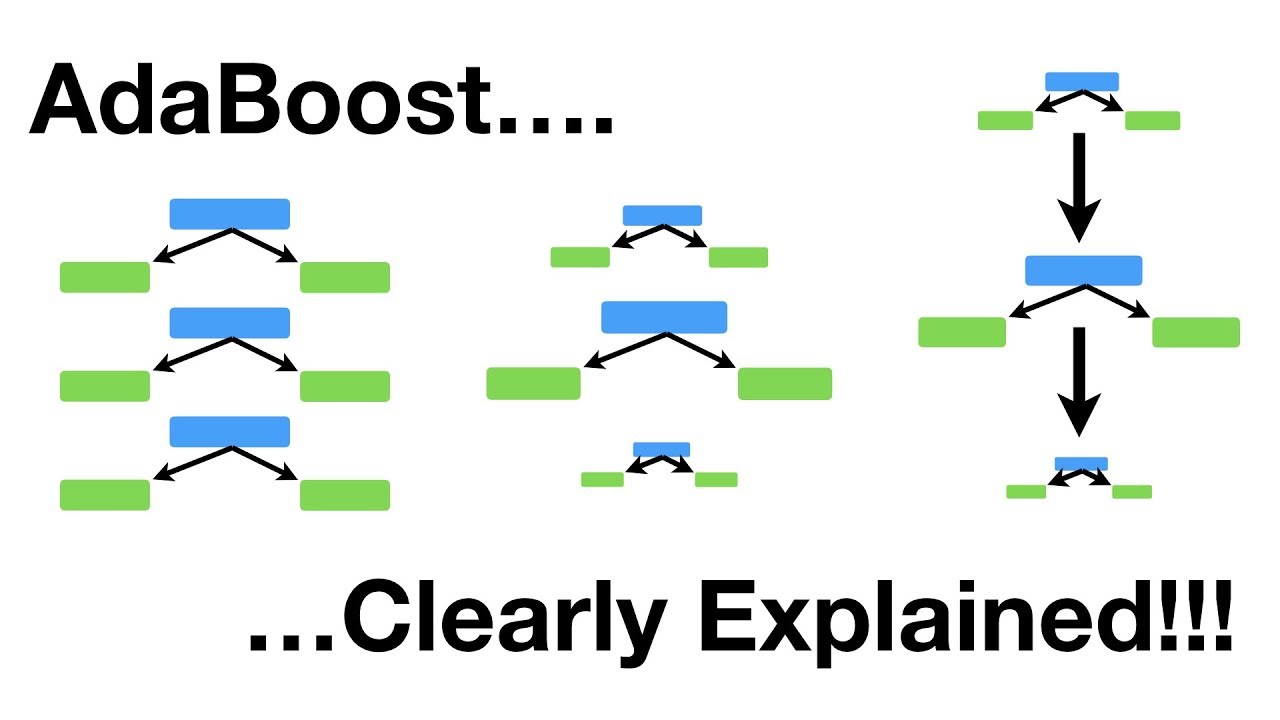

To understand AdaBoost, it helps to first compare it with decision trees and random forests, since AdaBoost builds on the same ideas. In a random forest, each tree is a full-sized tree, so it can use several variables to make a decision. In AdaBoost, by contrast, the trees are usually stumps: trees with just one node and two leaves. Because a stump can use only one variable to make a decision, it is called a weak learner; on its own, it is usually not very accurate.
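A stump can be represented as a single yes/no test plus one prediction per leaf. The sketch below is a minimal illustration that assumes yes/no features; the `Stump` class and the feature name `blocked_arteries` are hypothetical and not part of any library.

```python
from dataclasses import dataclass


@dataclass
class Stump:
    """Hypothetical stump: one root node testing a single yes/no feature, and two leaves."""
    feature: str     # the only variable this stump looks at
    if_true: bool    # leaf prediction when the feature is True
    if_false: bool   # leaf prediction when the feature is False

    def predict(self, sample: dict) -> bool:
        return self.if_true if sample[self.feature] else self.if_false


# Hypothetical stump: predict heart disease when the patient has blocked arteries.
stump = Stump(feature="blocked_arteries", if_true=True, if_false=False)
print(stump.predict({"chest_pain": False, "blocked_arteries": True}))
```

Because `predict` reads exactly one key from the sample, the stump ignores every other variable, which is what makes it a weak learner.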

In a random forest, each tree has an equal say in the final classification. However, in an AdaBoost forest, some stumps hold more influence in the classification process than others. This distinction allows AdaBoost to prioritize accuracy by giving more voting power to stumps that perform well.

Furthermore, while trees in a random forest are constructed independently, the order in which stumps are created matters in an AdaBoost forest. The mistakes made by the previous stump guide the creation of subsequent stumps, fostering a stronger ensemble.

Unveiling the Power of AdaBoost

Now, let’s explore the inner workings of AdaBoost and how it creates a forest of stumps from scratch for accurate predictions. Imagine we want to predict if a patient has heart disease based on their chest pain, blocked artery status, and weight.

The first step is to give each sample a weight that indicates how important it is to classify that sample correctly. Initially, every sample gets the same weight, 1 divided by the total number of samples, so all samples are equally important. As stumps make mistakes, these weights will be modified.
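In code, the starting weights are simply 1/N repeated N times. This is a minimal sketch; `initial_weights` is a hypothetical helper name.

```python
def initial_weights(n_samples: int) -> list:
    """Hypothetical helper: give every sample weight 1 / n_samples, so the weights sum to 1."""
    if n_samples <= 0:
        raise ValueError("need at least one sample")
    return [1.0 / n_samples] * n_samples


weights = initial_weights(8)   # e.g. eight patients
print(weights)
```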

Next, we create the first stump by finding the variable (chest pain, blocked arteries, or weight) that best separates the samples. We make one candidate stump per variable, calculate the Gini index of each, and keep the stump with the lowest Gini index.
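As a minimal sketch of this step, the code below scores one candidate stump per feature with the Gini index and keeps the lowest. It runs on made-up data for six patients, assumes yes/no features, and replaces patient weight with a hypothetical `heavy` flag. The sample weights are passed into the Gini calculation so the same code still works once the weights are no longer equal.

```python
# Hypothetical toy data: six patients described by three yes/no features.
X = [
    {"chest_pain": True,  "blocked_arteries": True,  "heavy": True},
    {"chest_pain": True,  "blocked_arteries": False, "heavy": False},
    {"chest_pain": False, "blocked_arteries": True,  "heavy": True},
    {"chest_pain": False, "blocked_arteries": False, "heavy": True},
    {"chest_pain": True,  "blocked_arteries": False, "heavy": False},
    {"chest_pain": False, "blocked_arteries": False, "heavy": False},
]
y = [True, True, True, False, False, False]  # True = heart disease
w = [1 / len(X)] * len(X)                     # equal starting weights


def gini(pairs):
    """Weighted Gini impurity of one leaf; pairs is a list of (label, weight)."""
    total = sum(wt for _, wt in pairs)
    if total == 0:
        return 0.0
    p = sum(wt for label, wt in pairs if label) / total
    return 1.0 - p ** 2 - (1.0 - p) ** 2


def stump_gini(feature):
    """Gini index of a stump on `feature`: each leaf's impurity weighted by its share of weight."""
    left = [(yi, wi) for xi, yi, wi in zip(X, y, w) if xi[feature]]
    right = [(yi, wi) for xi, yi, wi in zip(X, y, w) if not xi[feature]]
    total = sum(w)
    return sum(sum(wt for _, wt in leaf) / total * gini(leaf) for leaf in (left, right))


scores = {f: stump_gini(f) for f in ["chest_pain", "blocked_arteries", "heavy"]}
best = min(scores, key=scores.get)
print(best, round(scores[best], 3))
```

On this toy data, the blocked-arteries stump wins with a Gini index of 0.25, while chest pain and the `heavy` flag both score 4/9 (about 0.444).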

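Once the stump is chosen, we determine how much say it has in the final classification, based on how well it classified the samples. First we compute its Total Error, the sum of the weights of the samples it misclassified. Because the weights sum to one, Total Error is always between 0 and 1. The amount of say is then 1/2 × ln((1 − Total Error) / Total Error). A stump with a small Total Error gets a large positive amount of say, a stump that does no better than a coin flip (Total Error = 0.5) gets an amount of say of zero, and a stump with a Total Error close to 1 gets a large negative amount of say.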
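The amount-of-say formula translates directly into Python. This is a minimal sketch; clamping Total Error to `[eps, 1 - eps]` is an added assumption that keeps a perfect stump (error 0) or a completely wrong stump (error 1) from causing a division by zero or `log(0)`.

```python
import math


def amount_of_say(total_error: float, eps: float = 1e-10) -> float:
    """Amount of say = 1/2 * ln((1 - Total Error) / Total Error).

    Total Error (the summed weight of the misclassified samples) is clamped
    to [eps, 1 - eps] as a safeguard; eps is an assumed small constant.
    """
    total_error = min(max(total_error, eps), 1 - eps)
    return 0.5 * math.log((1 - total_error) / total_error)


# E.g. six samples weighted 1/6 each with one misclassified -> Total Error = 1/6.
for err in (1 / 6, 0.5, 0.9):
    print(f"Total Error {err:.3f} -> amount of say {amount_of_say(err):+.3f}")
```

The three values illustrate the three regimes: about +0.805 for a good stump, 0 for a coin flip, and about −1.099 for a stump that is mostly wrong.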

To guide the creation of subsequent stumps, we modify the sample weights. We increase the weight of each incorrectly classified sample by multiplying it by e^(amount of say), and decrease the weight of each correctly classified sample by multiplying it by e^(−amount of say). The more say the stump has, the larger the adjustment, so the next stump pays more attention to the samples this one got wrong.
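The update rule can be sketched as follows. The six equal weights, the choice of which sample is misclassified, and the helper name `update_weights` are all hypothetical, chosen only to illustrate the arithmetic.

```python
import math


def update_weights(weights, misclassified, say):
    """Scale each weight by e^(say) if its sample was misclassified, else by e^(-say)."""
    return [
        w * math.exp(say if wrong else -say)
        for w, wrong in zip(weights, misclassified)
    ]


weights = [1 / 6] * 6
misclassified = [False, True, False, False, False, False]  # hypothetical: only sample 2 is wrong
say = 0.5 * math.log((1 - 1 / 6) / (1 / 6))                 # amount of say for Total Error = 1/6
new_weights = update_weights(weights, misclassified, say)
print([round(w, 4) for w in new_weights])
```

Here e^(amount of say) works out to √5, so the misclassified sample grows from 1/6 to about 0.3727 and each correct sample shrinks to about 0.0745.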

After modifying the sample weights, we normalize them so they add up to one. This keeps the weights a proper distribution, so the next stump’s Total Error (the sum of the weights of the samples it misclassifies) again falls between 0 and 1.
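Normalization just divides every weight by their total. In this minimal sketch, `normalize` is a hypothetical helper, and the second example uses made-up raw weights in which one misclassified sample was scaled up by √5 and five correct samples were scaled down by √5.

```python
import math


def normalize(weights):
    """Divide every weight by the total so the weights sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


print(normalize([2.0, 1.0, 1.0]))

# Hypothetical raw weights after one update step (sample 2 misclassified).
raw = [1 / (6 * math.sqrt(5))] * 6
raw[1] = math.sqrt(5) / 6
print([round(w, 3) for w in normalize(raw)])
```

After normalization, the misclassified sample carries weight 0.5 and each of the other five carries 0.1, so the next stump sees that sample as five times more important than any other.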

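We repeat this process for each subsequent stump. Each new stump is chosen using the updated sample weights, either with a weighted Gini index or by drawing a new collection of samples in which heavily weighted samples are more likely to appear, so it concentrates on the errors made by the previous stumps. The result is a forest of stumps that together make accurate classifications.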
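Putting the steps together, the loop below is a minimal, self-contained sketch of AdaBoost with stumps. It assumes yes/no features (patient weight is replaced by a hypothetical `heavy` flag), picks each stump with a weighted Gini index rather than by resampling, and runs on six made-up patients. The names `fit_stump`, `adaboost`, and `predict` are illustrative only.

```python
import math

FEATURES = ["chest_pain", "blocked_arteries", "heavy"]

# Hypothetical toy data: six patients, three yes/no features, label True = heart disease.
X = [
    {"chest_pain": True,  "blocked_arteries": True,  "heavy": True},
    {"chest_pain": True,  "blocked_arteries": False, "heavy": False},
    {"chest_pain": False, "blocked_arteries": True,  "heavy": True},
    {"chest_pain": False, "blocked_arteries": False, "heavy": True},
    {"chest_pain": True,  "blocked_arteries": False, "heavy": False},
    {"chest_pain": False, "blocked_arteries": False, "heavy": False},
]
y = [True, True, True, False, False, False]


def gini(pairs):
    """Weighted Gini impurity of one leaf; pairs is a list of (label, weight)."""
    total = sum(wt for _, wt in pairs)
    if total == 0:
        return 0.0
    p = sum(wt for label, wt in pairs if label) / total
    return 1.0 - p ** 2 - (1.0 - p) ** 2


def fit_stump(X, y, w):
    """Return (feature, if_true, if_false) for the stump with the lowest weighted Gini index.

    Assumes the weights w sum to 1.
    """
    best = None
    for f in FEATURES:
        left = [(yi, wi) for xi, yi, wi in zip(X, y, w) if xi[f]]
        right = [(yi, wi) for xi, yi, wi in zip(X, y, w) if not xi[f]]
        w_left = sum(wi for _, wi in left)
        w_right = sum(wi for _, wi in right)
        score = w_left * gini(left) + w_right * gini(right)
        # Each leaf predicts whichever label carries more of that leaf's weight.
        if_true = sum(wi for yi, wi in left if yi) >= w_left / 2
        if_false = sum(wi for yi, wi in right if yi) >= w_right / 2
        if best is None or score < best[0]:
            best = (score, f, if_true, if_false)
    return best[1:]


def adaboost(X, y, n_stumps=3, eps=1e-10):
    """Train n_stumps stumps; returns a list of (feature, if_true, if_false, amount_of_say)."""
    n = len(X)
    w = [1.0 / n] * n                                      # equal starting weights
    ensemble = []
    for _ in range(n_stumps):
        f, if_true, if_false = fit_stump(X, y, w)
        preds = [if_true if x[f] else if_false for x in X]
        wrong = [p != yi for p, yi in zip(preds, y)]
        total_error = sum(wi for wi, bad in zip(w, wrong) if bad)
        total_error = min(max(total_error, eps), 1 - eps)  # assumed guard against log(0)
        say = 0.5 * math.log((1 - total_error) / total_error)
        # Boost misclassified samples, shrink correctly classified ones, then normalize.
        w = [wi * math.exp(say if bad else -say) for wi, bad in zip(w, wrong)]
        total = sum(w)
        w = [wi / total for wi in w]
        ensemble.append((f, if_true, if_false, say))
    return ensemble


def predict(ensemble, x):
    """Add up the amounts of say on each side and return the side with the larger sum."""
    yes = no = 0.0
    for f, if_true, if_false, say in ensemble:
        if (if_true if x[f] else if_false):
            yes += say
        else:
            no += say
    return yes > no


ensemble = adaboost(X, y)
for f, _, _, say in ensemble:
    print(f"{f}: amount of say {say:.3f}")
print("training accuracy:", sum(predict(ensemble, x) == yi for x, yi in zip(X, y)) / len(X))
```

On this toy data, the first stump splits on blocked arteries (amount of say 1/2 × ln 5, about 0.805). The second splits on chest pain (ln 2, about 0.693) because the reweighting makes the sample the first stump missed count for half of the total weight. The third splits on blocked arteries again. The three-stump ensemble classifies five of the six training patients correctly.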

To make a final classification, the amounts of say from the stumps that predict “heart disease” and “no heart disease” are added up. The patient is then classified based on the larger sum.
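The final vote can be sketched as below. The sketch assumes each stump’s output has already been reduced to a (prediction, amount of say) pair. The vote values are made up for illustration, and `classify` is a hypothetical helper.

```python
def classify(votes):
    """votes: list of (predicts_heart_disease, amount_of_say) pairs, one per stump."""
    yes = sum(say for pred, say in votes if pred)
    no = sum(say for pred, say in votes if not pred)
    # Ties go to "no heart disease" in this sketch.
    return "heart disease" if yes > no else "no heart disease"


# Hypothetical votes from four stumps for one patient.
votes = [(True, 0.2), (True, 0.25), (True, 0.3), (False, 0.9)]
print(classify(votes))
```

Here three of the four stumps vote "heart disease", yet the patient is classified as "no heart disease". The single dissenting stump has more say (0.9) than the other three combined (0.75), which is exactly how AdaBoost differs from an equal-vote random forest.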

FAQs

Q: Is AdaBoost only used with decision trees?

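AdaBoost is most commonly used with decision stumps because they are simple, fast to build, and naturally weak learners. However, AdaBoost can be used with other weak learners as well, as long as they can take the sample weights into account (or be trained on data resampled according to those weights).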

Q: Can you explain how sample weights influence the creation of subsequent stumps in more detail?

Certainly! The sample weights tell the next stump which samples matter most. The weights of incorrectly classified samples are increased, while the weights of correctly classified samples are decreased. When the next stump is chosen, using either a weighted Gini index or data resampled according to the weights, the misclassified samples count for more, so the new stump tends to focus on getting them right. This is how the ensemble gradually improves its accuracy.

Q: What if a stump consistently misclassifies samples?

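If a stump misclassifies most samples (that is, the samples it gets wrong hold more than half of the total sample weight), its amount of say becomes negative, and the closer it comes to getting everything wrong, the larger that negative value is. When the votes are added up, a negative amount of say pushes the classification in the opposite direction of the stump’s prediction. In effect, the stump’s vote is reversed, so a stump that is consistently wrong still contributes useful information instead of harming the final result.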
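The reversal can be sketched in a few lines. The Total Error of 0.9, the second stump’s amount of say of 0.5, and the `tally` helper are all hypothetical values chosen for illustration.

```python
import math

# Hypothetical stump whose misclassified samples hold 90% of the total weight.
say = 0.5 * math.log((1 - 0.9) / 0.9)   # = -0.5 * ln(9) = -ln(3), about -1.099


def tally(votes):
    """Sum the amounts of say for each side, as in the final classification step."""
    yes = sum(s for pred, s in votes if pred)
    no = sum(s for pred, s in votes if not pred)
    return yes, no


# Both stumps vote "heart disease": the bad one (negative say) and a decent one (say 0.5).
yes, no = tally([(True, say), (True, 0.5)])
result = "heart disease" if yes > no else "no heart disease"
print(round(yes, 3), round(no, 3), result)
```

Even though every stump voted "heart disease", the negative amount of say drags the "heart disease" total below zero, so the patient is classified as "no heart disease". Voting "heart disease" with negative say has the same effect as voting "no heart disease" with a positive say of about 1.099.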

Conclusion

AdaBoost is a fascinating algorithm that combines weak learners to create a powerful ensemble classifier. By understanding the concepts behind AdaBoost and its iterative approach to learning, we gain insight into its ability to improve classification accuracy. As technology continues to evolve, AdaBoost remains a valuable tool in the field of machine learning, empowering us to make more accurate predictions.

To learn more about AdaBoost and explore other exciting topics in technology, visit Techal. Stay tuned for more captivating content that will fuel your passion for all things tech!