Have you ever wondered how 3D models of objects and scenes are created? One approach that has gained popularity is Depth from Defocus, a technique that leverages the blurriness of images to determine the depth of objects in a scene. In this article, we will explore the concept of Depth from Defocus and how it enables the generation of accurate 3D reconstructions.

Understanding Depth from Defocus

Depth from Defocus is based on the observation that the amount of blur in an image carries information about the depth of the scene. Instead of searching for the setting at which a scene point comes into focus, Depth from Defocus estimates how much that point is blurred. By determining the Point Spread Function (PSF) associated with each point in the scene, we can estimate the depth of the corresponding point.

To illustrate this concept, let’s consider a single image of a scene. Each point in the scene has a different level of blurriness, described by its PSF. By estimating the PSF for each point, we can determine the diameter of the blur circle, denoted as “b.” Because the blur circle diameter depends on how far the point lies from the plane that is in focus, we can use it, together with the known camera settings, to estimate the depth (or “o”) of the point in the scene.
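The link between “b” and “o” can be sketched with an ideal thin-lens model. This is a minimal illustration, not the method of any particular system: the parameters `focal_length`, `aperture`, and `sensor_distance` are assumptions introduced here, and real lenses deviate from this idealized geometry.

```python
def blur_diameter(o, focal_length, aperture, sensor_distance):
    """Blur circle diameter b for a point at depth o under a thin-lens model.

    focal_length, aperture (diameter) and sensor_distance (lens to sensor)
    are assumed camera parameters; all lengths share one unit.
    """
    return aperture * sensor_distance * abs(
        1.0 / focal_length - 1.0 / o - 1.0 / sensor_distance
    )


def depths_from_blur(b, focal_length, aperture, sensor_distance):
    """Candidate depths o that produce blur diameter b.

    A single blur measurement is two-sided: the point may lie in front of
    or behind the plane that is in focus, so up to two depths are returned.
    """
    in_focus = 1.0 / focal_length - 1.0 / sensor_distance  # 1/o of a sharp point
    candidates = []
    for sign in (1.0, -1.0):
        inv_o = in_focus + sign * b / (aperture * sensor_distance)
        if inv_o > 0.0:  # keep physically valid depths only
            candidates.append(1.0 / inv_o)
    return sorted(candidates)


# Example: 50 mm lens, 25 mm aperture, sensor 52.5 mm behind the lens (in metres).
b = blur_diameter(2.0, 0.050, 0.025, 0.0525)
print(b, depths_from_blur(b, 0.050, 0.025, 0.0525))
```

In this model a given blur diameter is consistent with one depth in front of the in-focus plane and one behind it, so a single blur value does not pin down depth on its own.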

The Process of Depth from Defocus

To implement Depth from Defocus, we typically capture two or more images of the same scene under different focus settings. By changing either the aperture or the position of the image sensor, we can generate images in which each scene point appears with a different level of blur.

Once we have the captured images, we can compute the ratio of their Fourier transforms. Because both images come from the same underlying focused image, that term cancels, and the ratio reduces to the ratio of the Fourier transforms of the two PSFs. This ratio captures the relative blur of each point. From there, we can solve for the blur parameters (sigma1 and sigma2) and the focused image (f) that best reproduce the captured images, that is, that minimize the reconstruction error.
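The Fourier-ratio idea can be sketched on a single scanline. The example below assumes Gaussian PSFs (a common simplification, not something the method requires) and uses circular blurring on a synthetic random signal; the helper names are hypothetical. Under that assumption the log of the spectral ratio is a straight line in squared frequency, and its slope gives sigma1² − sigma2².

```python
import cmath
import math
import random


def dft(x):
    """Plain O(n^2) discrete Fourier transform, fine for a short scanline."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft_real(X):
    """Inverse DFT, keeping the real part (the signals here are real)."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]


def gaussian_otf(n, sigma):
    """Fourier transform of a Gaussian PSF with spread sigma (in pixels)."""
    freqs = [(k if k <= n // 2 else k - n) / n for k in range(n)]  # cycles/pixel
    return [math.exp(-2.0 * (math.pi * sigma * u) ** 2) for u in freqs]


def defocus(signal, sigma):
    """Blur a scanline by multiplying its spectrum with the Gaussian OTF."""
    H = gaussian_otf(len(signal), sigma)
    return idft_real([Fk * Hk for Fk, Hk in zip(dft(signal), H)])


def relative_blur(g1, g2, max_bin=8):
    """Estimate sigma1^2 - sigma2^2 from the low-frequency spectral ratio.

    Model: ln(|G1| / |G2|) = -2 * pi^2 * (sigma1^2 - sigma2^2) * u^2,
    fitted by least squares through the origin.
    """
    n = len(g1)
    G1, G2 = dft(g1), dft(g2)
    num = den = 0.0
    for k in range(1, max_bin + 1):
        x = -2.0 * (math.pi * k / n) ** 2
        y = math.log(abs(G1[k]) / abs(G2[k]))
        num += x * y
        den += x * x
    return num / den


random.seed(0)
sharp = [random.random() for _ in range(64)]  # stand-in for the focused image f
g1 = defocus(sharp, sigma=2.0)                # first focus setting
g2 = defocus(sharp, sigma=1.0)                # second focus setting
print(relative_blur(g1, g2))                  # close to 2.0**2 - 1.0**2
```

Only the low-frequency bins are used in the fit, since the blurred spectra become very small at high frequencies and their ratio becomes unreliable there.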

Advantages and Challenges

Depth from Defocus offers several advantages over other depth estimation techniques. It can work with as few as two images, which makes capture more efficient than methods that need a larger number of images. Additionally, Depth from Defocus can be implemented in real time, allowing for dynamic 3D reconstructions.

However, Depth from Defocus is sensitive to noise, particularly in the high-frequency components of the images. High-frequency information is crucial for accurate depth estimation but is also prone to noise interference. Researchers have developed techniques to address this challenge, such as Reconstruction-Based Depth from Defocus, which minimizes the reconstruction error by optimizing the focused image and blur diameters.
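The reconstruction-based idea can be illustrated with a small search over candidate blur settings. This is a simplified sketch under stated assumptions, not the published algorithm: it works on one scanline, assumes Gaussian PSFs, and assumes that each candidate depth supplies one hypothetical (sigma1, sigma2) pair. That last assumption matters because, with Gaussian PSFs, the two images alone constrain only the difference sigma1² − sigma2², so the two values cannot be varied freely.

```python
import cmath
import math
import random


def dft(x):
    """Plain O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft_real(X):
    """Inverse DFT, keeping the real part."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]


def gaussian_otf(n, sigma):
    """Fourier transform of a Gaussian PSF with spread sigma (in pixels)."""
    freqs = [(k if k <= n // 2 else k - n) / n for k in range(n)]
    return [math.exp(-2.0 * (math.pi * sigma * u) ** 2) for u in freqs]


def defocus(signal, sigma):
    """Blur a scanline in the frequency domain (used only to make test data)."""
    H = gaussian_otf(len(signal), sigma)
    return idft_real([Fk * Hk for Fk, Hk in zip(dft(signal), H)])


def reconstruction_error(G1, G2, sigma1, sigma2, eps=1e-12):
    """Residual left after fitting the best focused spectrum F for a blur pair.

    Per frequency, F = (H1*G1 + H2*G2) / (H1^2 + H2^2) minimizes
    |G1 - H1*F|^2 + |G2 - H2*F|^2; eps only guards against division by zero.
    """
    n = len(G1)
    H1, H2 = gaussian_otf(n, sigma1), gaussian_otf(n, sigma2)
    error = 0.0
    for a, b, h1, h2 in zip(G1, G2, H1, H2):
        F = (h1 * a + h2 * b) / (h1 * h1 + h2 * h2 + eps)
        error += abs(a - h1 * F) ** 2 + abs(b - h2 * F) ** 2
    return error


def best_blur_pair(g1, g2, candidates):
    """Pick the candidate (sigma1, sigma2) with the smallest reconstruction error."""
    G1, G2 = dft(g1), dft(g2)
    return min(candidates, key=lambda pair: reconstruction_error(G1, G2, *pair))


random.seed(0)
sharp = [random.random() for _ in range(64)]
# Two simulated captures with a little sensor noise added.
g1 = [v + random.gauss(0.0, 0.001) for v in defocus(sharp, 2.0)]
g2 = [v + random.gauss(0.0, 0.001) for v in defocus(sharp, 1.0)]
# Hypothetical blur pairs, one per candidate depth.
candidates = [(0.5, 2.5), (1.0, 2.0), (1.5, 1.5), (2.0, 1.0), (2.5, 0.5)]
print(best_blur_pair(g1, g2, candidates))
```

Because the error is measured against both captured images at once rather than through a ratio of spectra, frequencies where the signal is weak contribute little to the decision, which is one reason this formulation tends to cope better with noise.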

Applications of Depth from Defocus

Depth from Defocus has found applications in various fields, including computer vision, robotics, and augmented reality. Its ability to quickly and accurately estimate the depth of objects in a scene makes it valuable for 3D modeling, object recognition, and scene understanding.

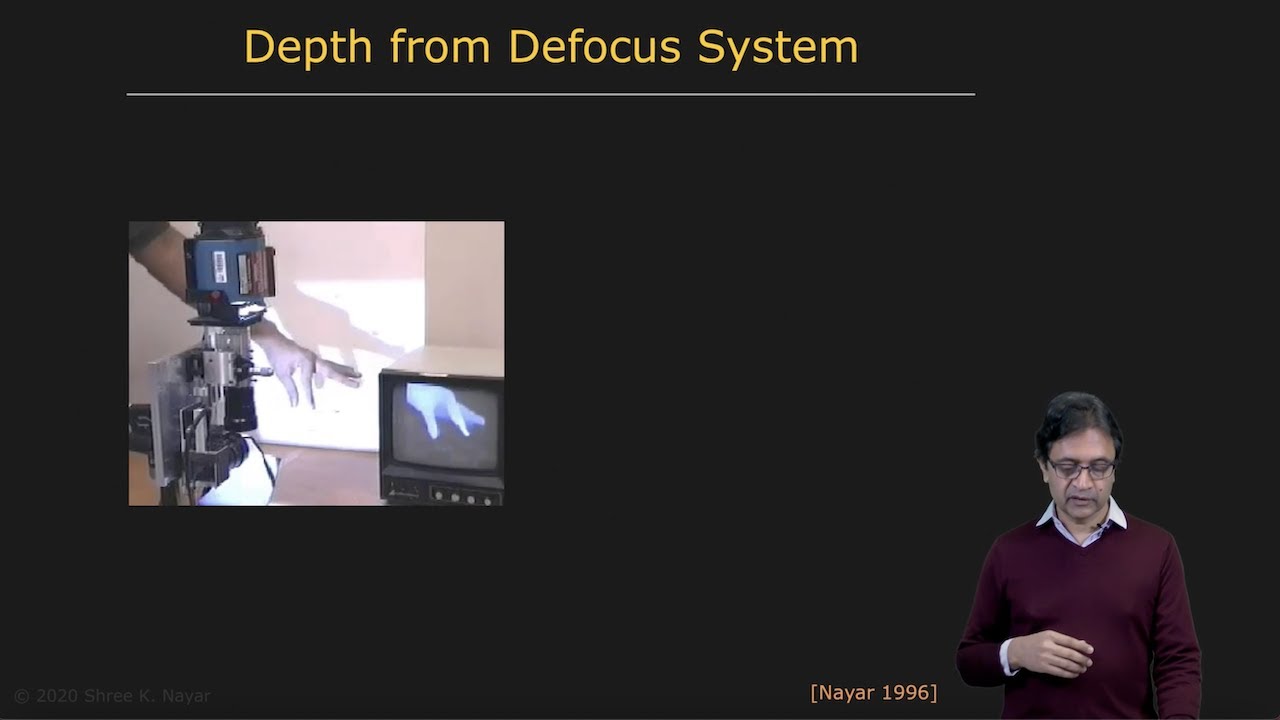

For example, Depth from Defocus has been used to reconstruct the 3D structure of human hands in real time, enabling touchless hand gesture control. It has also been employed to create detailed 3D models of objects, enhancing product visualization for e-commerce platforms.

FAQs

Q: How many images are required for Depth from Defocus?

Most Depth from Defocus techniques require a minimum of two images captured under different focus settings. However, more images can be used to improve the accuracy of the depth estimation.

Q: Is Depth from Defocus sensitive to noise?

Yes, Depth from Defocus can be sensitive to noise, particularly in the high-frequency components of the images. The presence of noise in these frequencies can affect the accuracy of the depth estimation.

Q: What are the applications of Depth from Defocus?

Depth from Defocus has applications in computer vision, robotics, augmented reality, and more. It is used for tasks such as 3D reconstruction, object recognition, and scene understanding.

Q: Can Depth from Defocus be implemented in real time?

Yes, Depth from Defocus can be implemented in real time, allowing for dynamic 3D reconstructions. This makes it suitable for applications that need depth estimates at interactive rates.

Conclusion

Depth from Defocus is an approach to 3D reconstruction that uses the blurriness of images to estimate the depth of objects in a scene. By analyzing how strongly different points in the scene are blurred across two or more images, it enables the creation of 3D models from ordinary camera captures. Despite its sensitivity to noise, Depth from Defocus has found applications in computer vision, robotics, and augmented reality.
