Welcome to part 2 of our series on Natural Language Understanding (NLU) and Information Retrieval (IR). In this installment, we will delve into classical IR and evaluation methods.

Contents

Ranked Retrieval: The Simplest Form of IR Task

In classical IR, one of the simplest tasks is ranked retrieval. This task involves providing a list of documents that are most relevant to a user’s query. The goal is to output a list of documents, sorted in decreasing order of relevance to the user’s query.

To accomplish this, we can use a term-document matrix, where each term-document pair has a corresponding cell that stores the number of times the term appears in the document. By applying reweighting techniques, we can compute the relevance score between a query and a document.

Term-Document Weighting Models in IR

When it comes to term-document weighting models in IR, two prominent intuitions come into play: frequency and normalization.

The frequency of a term in a document indicates its relevance. If a term occurs frequently in a document, it is more likely to be relevant for queries that include the term. On the other hand, if a term is rare, occurring in only a few documents, it can be seen as a stronger signal that the document is relevant for queries including that term.

Normalization plays a role in determining the strength of the term-document relationship. For instance, a shorter document might have a higher likelihood of containing relevant information related to a specific term.

One popular term weighting model is TF-IDF (Term Frequency-Inverse Document Frequency). In this model, the term frequency in a document is multiplied by the inverse document frequency, which is the logarithm of the ratio of the total number of documents to the number of documents containing the term. TF-IDF helps amplify important signals and deemphasize mundane and quirky ones.

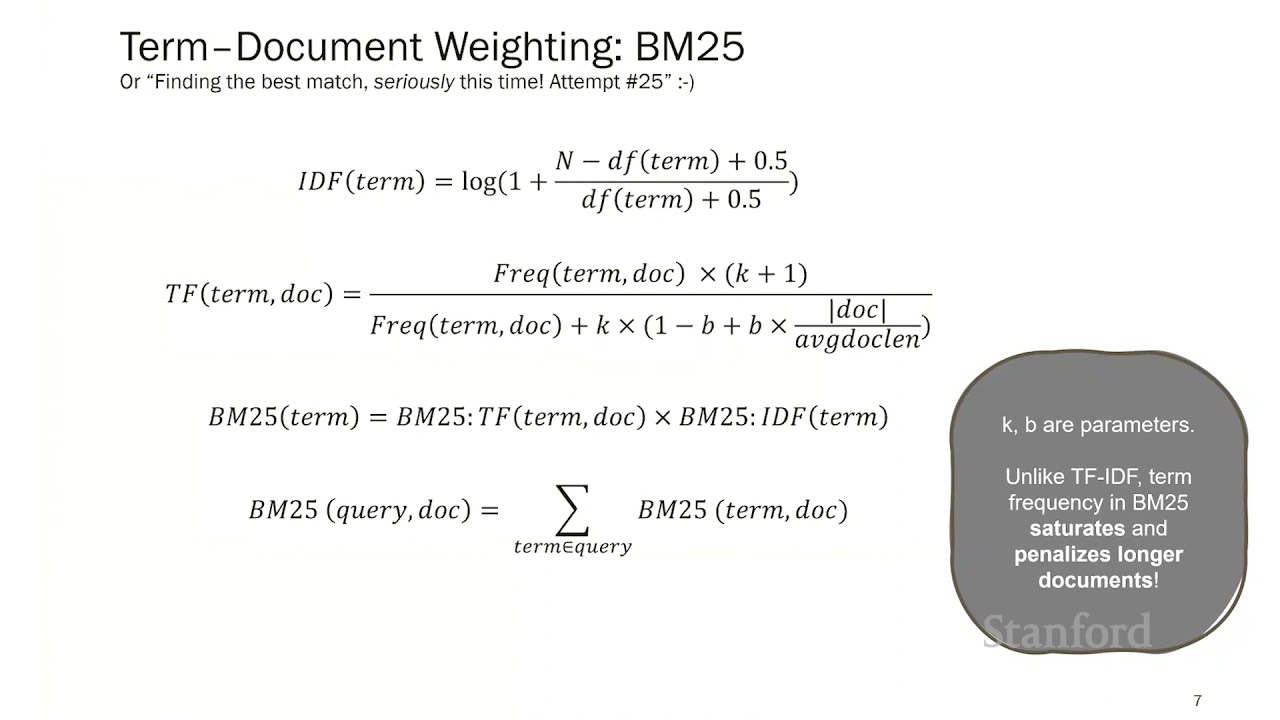

Another commonly used model is BM25 (Best Match 25), which is a stronger term weighting model compared to TF-IDF. BM25 saturates term frequency towards a constant value for each term and penalizes longer documents when counting frequencies. This model has proven to be highly effective in many IR applications.

Implementing IR Systems: The Role of Inverted Index

To implement an IR system, we need to consider the efficiency and effectiveness of the system. Efficiency is crucial, as we want retrieval models that work with subsecond latencies for collections that may contain millions of documents. Important factors to consider include latency, throughput, space requirements, scalability, and hardware needs.

The inverted index is a data structure that plays a key role in implementing an IR system. It maps each unique term in a collection to a posting list, which enumerates all the occurrences of the term in the documents. This data structure allows for efficient access to documents based on the terms.

Evaluation of IR Systems

An effective IR system must meet the users’ information needs. Evaluating the quality of an IR system can be more challenging than evaluating other machine learning tasks, as it involves ranking all the items in a corpus with respect to a query.

Efficiency metrics, such as latency, throughput, and hardware requirements, are important to consider. However, in this article, we will focus on IR effectiveness, which measures the quality of an IR system.

There are several metrics used to evaluate IR systems, including:

- Success@K: This metric assesses whether there is a relevant document in the Top-K results. It is useful when the user only needs one relevant result in the Top-K list.

- Mean Reciprocal Rank (MRR): MRR considers the position of the first relevant document in the ranking. It assigns higher weight to relevant documents in higher positions.

- Precision and Recall: Precision measures the fraction of retrieved items that are actually relevant, while recall measures the fraction of relevant items that are retrieved.

- Average Precision (AP): AP combines precision and recall, calculating the precision at each position in the ranking and averaging them.

- Discounted Cumulative Gain (DCG): DCG works with graded relevance, assigning higher relevance values to documents that appear higher in the ranking.

These evaluation metrics can be computed for individual queries and then averaged across all queries in the test collection.

Conclusion

Classical Information Retrieval (IR) relies on term-document weighting models like TF-IDF and BM25 to implement efficient and effective IR systems. The evaluation of these systems involves considering both efficiency and effectiveness metrics. By using various metrics such as Success@K, MRR, Precision, Recall, AP, and DCG, we can assess the quality of an IR system.

Stay tuned for the next installment where we will dive into Neural IR and explore state-of-the-art IR models that incorporate the principles of NLU.

Remember, for more insightful articles on technology, visit Techal.

FAQs

Q: What is the purpose of ranked retrieval in IR?

A: Ranked retrieval aims to provide users with a list of documents sorted in decreasing order of relevance to their query.

Q: Which term weighting models are commonly used in IR?

A: TF-IDF (Term Frequency-Inverse Document Frequency) and BM25 (Best Match 25) are popular term weighting models in IR.

Q: How do we evaluate the effectiveness of an IR system?

A: Evaluation metrics such as Success@K, MRR, Precision, Recall, AP, and DCG are used to assess the effectiveness of an IR system.

Q: What is the inverted index in IR?

A: The inverted index is a data structure that maps each unique term in a collection to a posting list, which enumerates all the occurrences of the term in the documents.

Q: What are some important factors in implementing an IR system?

A: Efficiency metrics (latency, throughput), space requirements, scalability, and hardware needs are important factors to consider in implementing an IR system.