Hello tech enthusiasts! Today, we will delve into the world of encoders and decoders and explore the challenges they present. In a previous video, we discussed how sequence-to-sequence learning with neural networks works, focusing on encoders and decoders. Now, let’s dive deeper into the problems associated with them.

The Issue at Hand

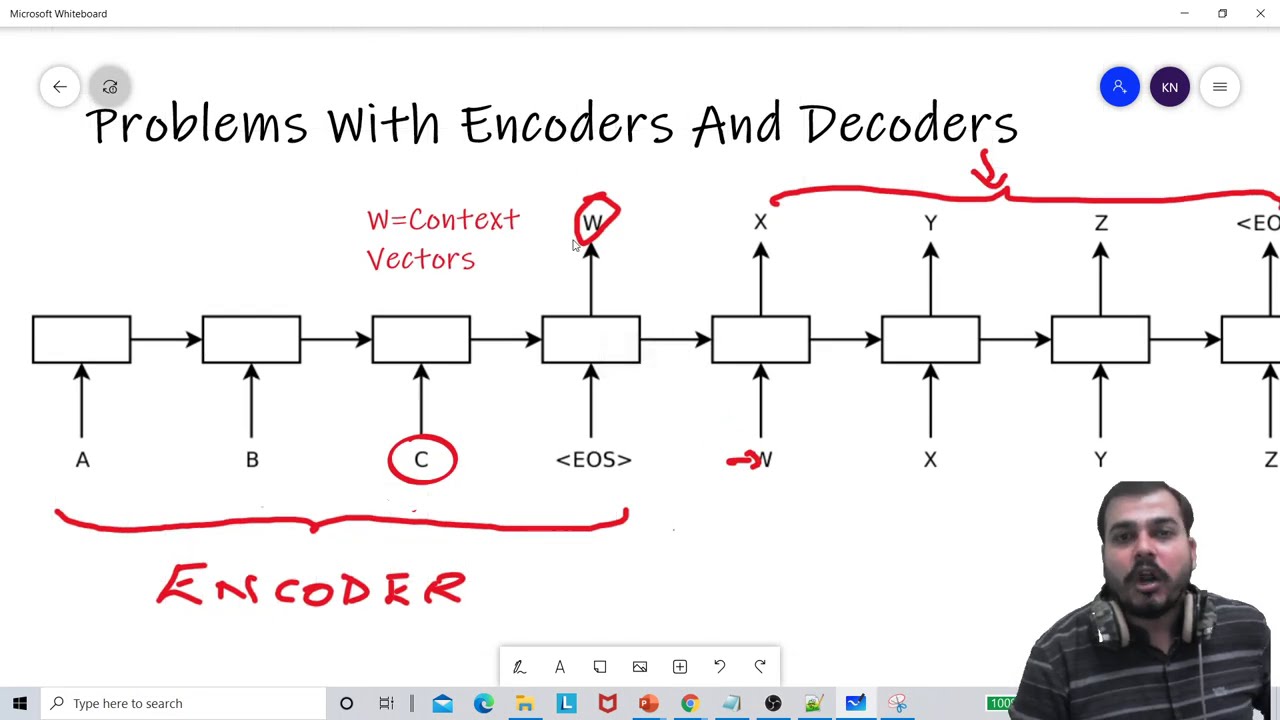

To begin, let’s recap the basic structure. In an encoder-decoder architecture, the encoder reads the input sentence and compresses it into a single fixed-size context vector (W). That vector is passed to the decoder, which generates the output sentence from it one word at a time.
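The encoder step described above can be sketched in a few lines. This is a minimal illustration with a plain tanh RNN and random, untrained weights, not the model from the video; the names `encode`, `W_x`, and `W_h` and the dimensions are assumptions made for the example. The point to notice is that the context vector has the same size whether the sentence has 5 words or 100.

```python
import numpy as np

def encode(embeddings, W_x, W_h):
    """Run a plain tanh RNN over the input and return every hidden state."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x in embeddings:
        # Each step mixes the current word with the running summary.
        h = np.tanh(W_x @ x + W_h @ h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
embed_dim, hidden_dim = 8, 16  # illustrative sizes, chosen arbitrarily
W_x = rng.normal(scale=0.1, size=(hidden_dim, embed_dim))
W_h = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))

# Stand-ins for word embeddings of a 5-word and a 100-word sentence.
short_sentence = rng.normal(size=(5, embed_dim))
long_sentence = rng.normal(size=(100, embed_dim))

# The context vector is the final hidden state of the encoder.
context_short = encode(short_sentence, W_x, W_h)[-1]
context_long = encode(long_sentence, W_x, W_h)[-1]

print(context_short.shape, context_long.shape)
```

Both vectors have shape `(16,)`, so the 100-word sentence has to fit into exactly as much space as the 5-word one.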

However, researchers ran into a significant problem when translating longer sentences. When translating from French to English, for example, the system performed well on short sentences but struggled with long ones. The quality of the translation dropped as sentence length increased.

Introducing the BLEU Score

To measure this problem, researchers used a metric called the BLEU score (Bilingual Evaluation Understudy). BLEU compares the word sequences (n-grams) in the system's translation with those in one or more human reference translations, and a higher score means a closer match. As sentence length increased, the BLEU score decreased, highlighting the issue with longer sentences.
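To make the metric concrete, here is a simplified sentence-level sketch of the idea behind BLEU. It assumes a single reference translation and counts only unigrams and bigrams, whereas the standard metric uses up to 4-grams and is usually computed over a whole corpus. The function name `simple_bleu` is made up for this example.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count every run of n consecutive tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def simple_bleu(candidate, reference, max_n=2):
    """Simplified BLEU: clipped n-gram precision times a brevity penalty."""
    precisions = []
    for n in range(1, max_n + 1):
        cand = ngrams(candidate, n)
        ref = ngrams(reference, n)
        # Clip each count so repeating a word cannot inflate the score.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if total == 0 or overlap == 0:
            return 0.0
        precisions.append(overlap / total)
    # Penalize candidates that are shorter than the reference.
    if len(candidate) > len(reference):
        brevity = 1.0
    else:
        brevity = math.exp(1 - len(reference) / len(candidate))
    # Geometric mean of the n-gram precisions.
    return brevity * math.exp(sum(math.log(p) for p in precisions) / max_n)

reference = "the cat is on the mat".split()
candidate = "the cat sat on the mat".split()

score = simple_bleu(candidate, reference)
print(round(score, 3))  # about 0.707
```

Here 5 of 6 unigrams and 3 of 5 bigrams match the reference, so the score is the square root of 5/6 × 3/5 = 0.5. A translation identical to the reference scores 1.0.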

The Difficulty with Capturing Information

So, what causes this decline in translation quality with longer sentences? The main culprit is the context vector (W) generated by the encoder. Its size is fixed, so the longer the sentence, the more information has to be squeezed into the same space, and the vector can no longer capture the meaning and importance of every word.

To illustrate, let’s consider the analogy of a human translator. If we were to task a human with memorizing and translating a hundred-word sentence, accuracy would decrease significantly. Similarly, the encoder’s context vector struggles to capture the significance of all the words in longer sentences.

The Solution: Attention Models

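To overcome this problem, attention models come to the rescue. Instead of relying on one vector for the whole sentence, an attention model keeps the encoder's output for every input word, produced by a bi-directional recurrent network, and lets the decoder decide which of those words matter most for the word it is about to produce. You can think of this as a window over the input: at each step the translator concentrates on a small set of relevant words rather than the entire sentence.

As the decoder generates each output word, that window of focus shifts, gradually moving through the sentence. This approach mimics how a human translator would tackle a long sentence, working through it part by part.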

The Promise of Attention Models

By adopting attention models, the translation system becomes more effective, even with longer sentences. The encoder is a bi-directional LSTM (Long Short-Term Memory) RNN (Recurrent Neural Network), which reads the input both forwards and backwards so that the representation of each word reflects the words on both sides of it.

The attention model allows the decoder to align each output word with the input words it depends on, resulting in improved translation quality. This method outperforms the traditional encoder-decoder approach on longer sentences, as evidenced by higher BLEU scores.
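The alignment step can be sketched as follows. This is a minimal illustration, assuming a simple dot-product score between the decoder state and each encoder state; attention models for translation often use a small learned network for the score instead. The function name `attend` and the random inputs are placeholders for this example.

```python
import numpy as np

def softmax(x):
    """Turn raw scores into positive weights that sum to 1."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

def attend(decoder_state, encoder_states):
    """Build a context vector for one decoding step."""
    # One relevance score per input word (dot product is an assumption here).
    scores = encoder_states @ decoder_state
    weights = softmax(scores)
    # Weighted average of the encoder states, dominated by the relevant words.
    context = weights @ encoder_states
    return context, weights

rng = np.random.default_rng(0)
encoder_states = rng.normal(size=(100, 16))  # one state per input word
decoder_state = rng.normal(size=16)          # decoder state at the current step

context, weights = attend(decoder_state, encoder_states)
print(weights.shape, context.shape)
```

The decoder calls `attend` again for every output word, so each word gets its own context vector instead of sharing a single one for the whole sentence.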

FAQs

Q: How do encoders and decoders perform with longer sentences?

A: Plain encoder-decoder models typically struggle with longer sentences, because the whole input must fit into one fixed-size context vector. This leads to a decline in translation quality.

Q: What is the BLEU score?

A: The BLEU score measures the quality of a translation by comparing it with human reference translations, with higher scores indicating a closer match. With a plain encoder-decoder model, longer sentences usually lead to lower BLEU scores.

Q: How do attention models address the problems with encoders and decoders?

A: Attention models let the decoder focus on a small window of relevant input words for each word it produces, rather than relying on a single vector for the whole sentence. This approach improves translation accuracy with longer sentences.

Conclusion

Encoders and decoders have their limitations when it comes to translating longer sentences. However, attention models largely overcome these limitations, resulting in more accurate and reliable translations.

Keep an eye out for our next video, where we will dive deeper into the intricacies of attention models and explore how they revolutionize the translation process. Don’t forget to subscribe to our channel for more informative content. Until next time, happy translating!