Neural networks have revolutionized the field of machine learning with their ability to learn complex patterns and make accurate predictions. However, training a neural network involves finding the optimal weights and biases, which can be computationally expensive. This is where the backpropagation algorithm comes into play.

What is Backpropagation?

Backpropagation is an algorithm that dramatically reduces the cost of computing the gradient that gradient descent needs, making the training of neural networks tractable. It lets us compute the derivative of the cost function with respect to every parameter of the network exactly, without relying on finite differences, which require re-evaluating the network for each parameter in turn.
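To make the comparison concrete, here is a minimal sketch of the finite-difference approach that backpropagation avoids. The function name `finite_difference_gradient`, the step size `eps`, and the toy cost are assumptions chosen for illustration; they do not come from the article. The point is the loop: every parameter needs its own cost evaluations, so the work grows with the number of parameters.

```python
def finite_difference_gradient(cost, params, eps=1e-6):
    """Estimate d(cost)/d(param) for every parameter by central differences."""
    grad = []
    for i in range(len(params)):
        bumped_up = list(params)
        bumped_down = list(params)
        bumped_up[i] += eps
        bumped_down[i] -= eps
        # Two full cost evaluations are needed for each parameter.
        grad.append((cost(bumped_up) - cost(bumped_down)) / (2 * eps))
    return grad


# Hypothetical toy cost with a known gradient: C(p) = p0^2 + 3*p1,
# so the exact gradient at p = [2, 5] is [4, 3].
def toy_cost(p):
    return p[0] ** 2 + 3 * p[1]


estimate = finite_difference_gradient(toy_cost, [2.0, 5.0])
```

For a real network, each call to `cost` would be a full forward pass over the training data, which is why this approach becomes impractical as the parameter count grows.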

A Simple Network

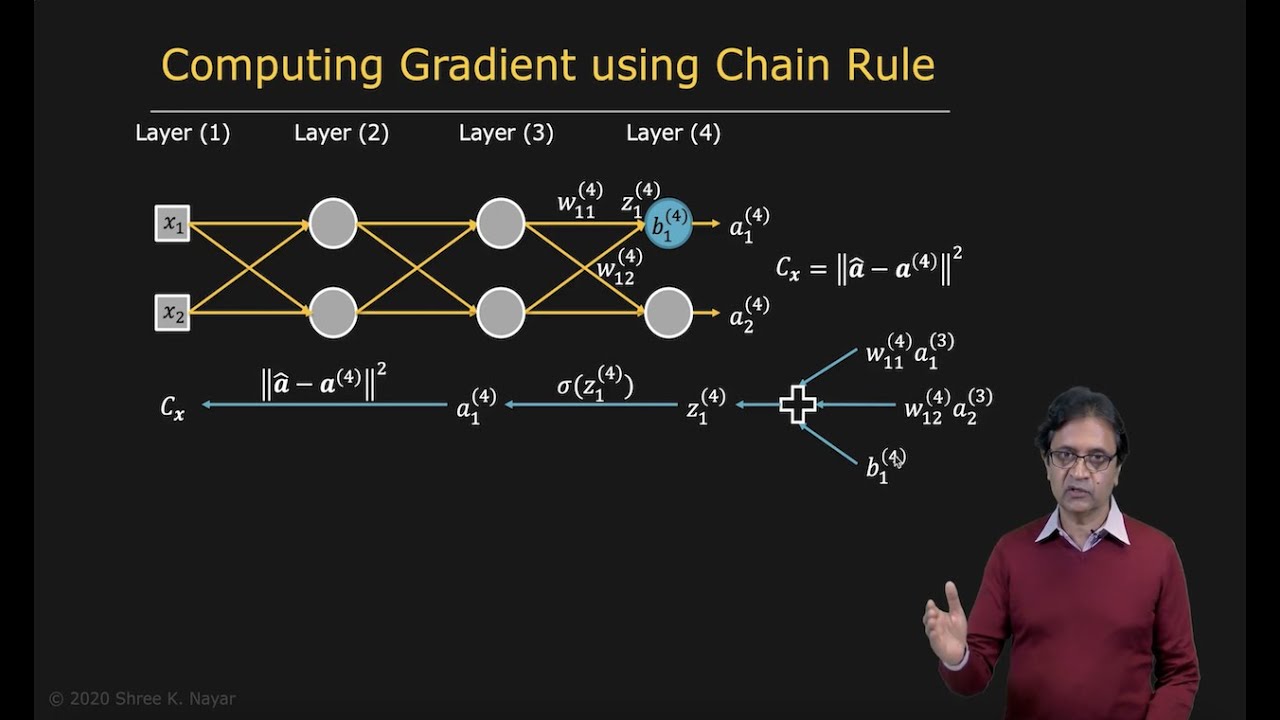

To understand how backpropagation works, let’s consider a simple neural network with two hidden layers and an output layer. We’ll focus on one neuron in the output layer for now.

Derivatives and Chain Rule

To find the derivative of the cost function with respect to a specific parameter, we apply the chain rule. By calculating the derivatives of the cost function with respect to the activation, the activation with respect to the weighted sum, and the weighted sum with respect to the parameter, we can find the desired derivative.
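Written out, the three factors described above multiply as follows. The symbols are assumptions chosen for illustration, since the text does not fix a notation: $C$ is the cost, $a$ the neuron's activation, $z$ its weighted sum, and $w$ the parameter in question.

$$
\frac{\partial C}{\partial w}
= \frac{\partial C}{\partial a} \cdot \frac{\partial a}{\partial z} \cdot \frac{\partial z}{\partial w}
$$

If the parameter is a bias $b$ rather than a weight, only the last factor changes, and since the bias is added directly to the weighted sum, $\partial z / \partial b = 1$.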

The Power of the Sigmoid Function

One advantage of using the sigmoid activation function is that its derivative can be expressed as the product of the activation and 1 minus the activation. Because the activation is already known from the feedforward pass, the derivative costs only one extra multiplication, which simplifies the calculations in the backpropagation algorithm.
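A small sketch of this identity, using only the Python standard library. The function names are illustrative, not taken from the article.

```python
import math


def sigmoid(z):
    """Sigmoid activation: squashes any real z into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime_from_activation(a):
    """Derivative of the sigmoid, written in terms of its output a = sigmoid(z)."""
    return a * (1.0 - a)


a = sigmoid(0.0)                          # 0.5
slope = sigmoid_prime_from_activation(a)  # 0.25, the sigmoid's steepest slope
```

Note that `sigmoid_prime_from_activation` takes the activation, not the weighted sum, so no second call to `math.exp` is needed.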

Computing Deltas and Gradients

The backpropagation algorithm involves calculating deltas, which are the derivatives of the cost function with respect to the weighted sum of each neuron in each layer. The deltas in the output layer can be computed directly using the chain rule. We then propagate these deltas back to the previous layers using the known weights and activations, allowing us to find the deltas for the entire network.
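In symbols, the two cases can be sketched as below. The notation is an assumption made for illustration: $L$ is the output layer, $l$ any earlier layer, $z^{l}_{j}$ and $a^{l}_{j}$ are the weighted sum and activation of neuron $j$ in layer $l$, $w^{l+1}_{kj}$ is the weight from neuron $j$ in layer $l$ to neuron $k$ in layer $l+1$, and $\sigma$ is the activation function.

$$
\delta^{L}_{j} = \frac{\partial C}{\partial a^{L}_{j}} \, \sigma'(z^{L}_{j}),
\qquad
\delta^{l}_{j} = \Big( \sum_{k} w^{l+1}_{kj} \, \delta^{l+1}_{k} \Big) \, \sigma'(z^{l}_{j})
$$

With the sigmoid activation, $\sigma'(z^{l}_{j}) = a^{l}_{j} \, (1 - a^{l}_{j})$, so both expressions use only quantities already computed during the feedforward pass.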

By using these deltas and the activations, we can compute the gradients of the cost function with respect to all the weights and biases of the network. These gradients provide us with the information we need to update the parameters using gradient descent.
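As a rough sketch of this step for a single layer: the gradient for a weight is the delta of the neuron it feeds into times the activation it carries, and the gradient for a bias is the delta itself. The function name `layer_gradients` and the sample numbers are hypothetical.

```python
def layer_gradients(delta, prev_activations):
    """Gradients of the cost for one layer, given its deltas.

    delta[j] is the delta of neuron j in this layer;
    prev_activations[k] is the activation of neuron k in the previous layer.
    """
    # dC/dw[j][k] = delta[j] * prev_activations[k]
    weight_grads = [[d * a for a in prev_activations] for d in delta]
    # dC/db[j] = delta[j], because the bias is added directly to the weighted sum.
    bias_grads = list(delta)
    return weight_grads, bias_grads


# Made-up values for a layer of 2 neurons fed by 2 activations.
weight_grads, bias_grads = layer_gradients([0.1, -0.2], [1.0, 0.5])
# weight_grads is [[0.1, 0.05], [-0.2, -0.1]] and bias_grads is [0.1, -0.2]
```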

The Backpropagation Algorithm

The backpropagation algorithm follows these steps:

1. Initialize the network with random weights and biases.

2. For each training image, compute the activations for the entire network.

3. Compute the deltas for the output layer.

4. Use backpropagation to compute the deltas for the previous layers.

5. Compute the gradients of the cost function with respect to all the parameters.

6. Average these gradients over all the training images.

7. Update the weights and biases using gradient descent.

8. Repeat steps 2 to 7 until the cost falls below a predefined threshold.
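The steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the article's implementation: it assumes sigmoid activations everywhere, a quadratic cost C = 0.5 * sum((a - y)^2), a tiny 2-3-1 network, and a toy dataset (logical OR) standing in for the training images. All function names, the learning rate, and the threshold are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(sizes, rng):
    """Step 1: random weights and biases; weights[l][j][k] links input k to neuron j."""
    weights = [[[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
               for n_in, n_out in zip(sizes[:-1], sizes[1:])]
    biases = [[rng.uniform(-1, 1) for _ in range(n_out)] for n_out in sizes[1:]]
    return weights, biases


def feedforward(weights, biases, x):
    """Step 2: activations of every layer, with the input as activations[0]."""
    activations = [x]
    for W, b in zip(weights, biases):
        prev = activations[-1]
        activations.append([sigmoid(sum(w * a for w, a in zip(row, prev)) + bj)
                            for row, bj in zip(W, b)])
    return activations


def backprop(weights, activations, y):
    """Steps 3 to 5: deltas for each layer, then gradients for one example."""
    out = activations[-1]
    # Step 3: output deltas for the assumed quadratic cost and sigmoid activation.
    delta = [(a - t) * a * (1 - a) for a, t in zip(out, y)]
    grads_w, grads_b = [], []
    for l in range(len(weights) - 1, -1, -1):
        prev = activations[l]
        # Step 5: gradient = delta of the receiving neuron * incoming activation.
        grads_w.insert(0, [[d * a for a in prev] for d in delta])
        grads_b.insert(0, list(delta))
        if l > 0:
            # Step 4: push the deltas back through the weights of layer l.
            delta = [sum(weights[l][j][k] * delta[j] for j in range(len(delta)))
                     * prev[k] * (1 - prev[k]) for k in range(len(prev))]
    return grads_w, grads_b


def train(data, sizes, lr=0.5, threshold=0.01, max_epochs=5000, seed=0):
    rng = random.Random(seed)
    weights, biases = init_network(sizes, rng)
    history = []  # average cost measured at the start of each epoch
    n = len(data)
    for _ in range(max_epochs):
        avg_w = [[[0.0] * len(row) for row in W] for W in weights]
        avg_b = [[0.0] * len(b) for b in biases]
        cost = 0.0
        for x, y in data:
            acts = feedforward(weights, biases, x)
            cost += 0.5 * sum((a - t) ** 2 for a, t in zip(acts[-1], y)) / n
            gw, gb = backprop(weights, acts, y)
            # Step 6: accumulate the average gradient over all examples.
            for l in range(len(weights)):
                for j in range(len(gb[l])):
                    avg_b[l][j] += gb[l][j] / n
                    for k in range(len(gw[l][j])):
                        avg_w[l][j][k] += gw[l][j][k] / n
        history.append(cost)
        if cost < threshold:  # Step 8: stop once the cost is low enough.
            break
        # Step 7: gradient descent update.
        for l in range(len(weights)):
            for j in range(len(biases[l])):
                biases[l][j] -= lr * avg_b[l][j]
                for k in range(len(weights[l][j])):
                    weights[l][j][k] -= lr * avg_w[l][j][k]
    return weights, biases, history


# Toy stand-in for the training images: the logical OR of two inputs.
data = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
        ([1.0, 0.0], [1.0]), ([1.0, 1.0], [1.0])]
weights, biases, history = train(data, sizes=[2, 3, 1])
```

Nested lists keep the sketch dependency-free; a practical implementation would normally use a matrix library so that each layer becomes a single matrix operation.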

Improved Complexity

The backpropagation algorithm significantly reduces the cost of computing the gradient compared with estimating it by finite differences, which requires re-evaluating the network for every parameter. For a single image, the number of computations for feedforward and backpropagation combined is roughly 48,000. Summed over 60,000 training images, one iteration of gradient descent therefore takes 48,000 × 60,000 ≈ 2.9 × 10^9 computations, a substantial improvement over the finite-difference approach.
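The arithmetic behind that estimate can be checked in a couple of lines. The variable names are illustrative; the two input figures are the ones quoted above.

```python
ops_per_image = 48_000   # feedforward + backpropagation for one image
num_images = 60_000      # size of the training set

# One gradient-descent iteration processes every training image once.
ops_per_iteration = ops_per_image * num_images
print(f"{ops_per_iteration:.2e}")  # prints 2.88e+09
```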

To learn more about neural networks and the backpropagation algorithm, visit Techal for informative articles and comprehensive guides.

FAQs

Q: What is the backpropagation algorithm?

A: The backpropagation algorithm is a technique used to train neural networks by efficiently computing the derivatives of the cost function with respect to the network’s parameters.

Q: Why is the sigmoid function advantageous in the backpropagation algorithm?

A: The sigmoid function’s derivative can be expressed as the activation multiplied by 1 minus the activation, simplifying the calculations in the backpropagation algorithm.

Q: What is the computational complexity of the backpropagation algorithm?

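A: Backpropagation computes the full gradient with one forward pass and one backward pass per training example, and the backward pass costs about as much as the forward pass. Finite differences, by contrast, need additional forward passes for every parameter, so backpropagation reduces the cost of each gradient descent iteration by a significant factor and makes training tractable.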

Conclusion

The backpropagation algorithm is a powerful technique that enables efficient training of neural networks. By using the chain rule and the derivatives of the sigmoid function, we can compute the gradients of the cost function and update the network’s parameters using gradient descent. This algorithm has revolutionized the field of machine learning and continues to drive advancements in artificial intelligence.
