Entropy appears throughout data science. It is used to build classification trees, to quantify the relationship between two variables, and inside some dimension reduction algorithms. In each case, entropy gives us a way to quantify similarities and differences.

A Closer Look at Surprise

Before diving into entropy, let’s explore the concept of surprise. Imagine we have two types of chickens, orange and blue, and we organize them into separate areas. In an area where orange chickens outnumber blue ones, a randomly picked chicken is more likely to be orange, so picking an orange chicken is not very surprising. Picking a blue chicken from that same area would be relatively surprising because blue chickens are rare there.

We can observe that surprise is inversely related to probability. When the probability is low, the surprise is high, and vice versa. This gives us a general intuition about how probability and surprise are connected.

Calculating Surprise with Probability

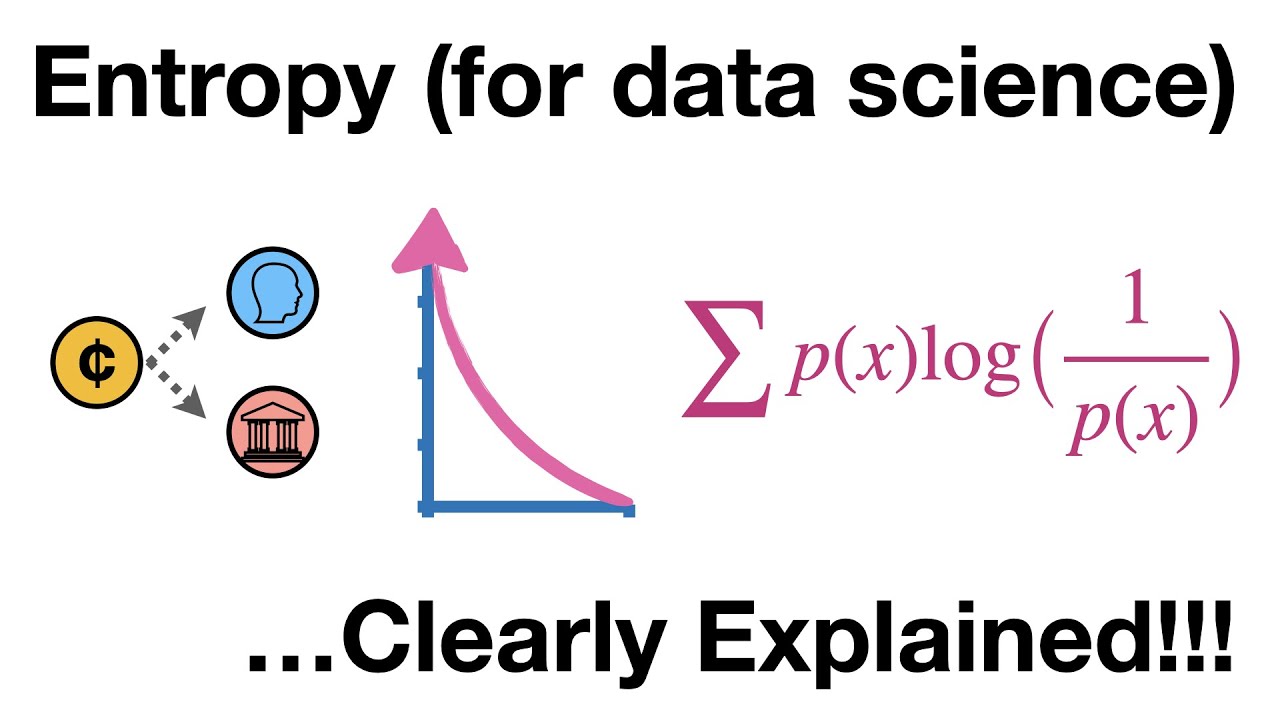

To calculate surprise, we can’t simply use the inverse of probability. When the probability is one, the outcome is certain and the surprise should be zero, but the inverse of one is one. Instead, we use the logarithm of the inverse of probability. Because the logarithm of one is zero, the surprise is zero when the probability is one, as expected. The logarithm also produces a smooth curve: the closer the probability gets to zero, the higher the surprise.
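As a minimal sketch of the calculation described above, the snippet below computes surprise as the logarithm of the inverse of probability. The function name `surprise` and the choice of base 2 (which gives surprise in bits) are assumptions made for illustration; the text above does not fix a base.

```python
import math


def surprise(p: float) -> float:
    """Surprise of an outcome with probability p, using log base 2 (assumed)."""
    if not 0.0 < p <= 1.0:
        # log(1/0) is undefined, so a probability of zero is rejected.
        raise ValueError("p must be in the interval (0, 1]")
    return math.log2(1.0 / p)


# Surprise grows as the probability shrinks, and is zero for a certain outcome.
for p in (1.0, 0.5, 0.1, 0.01):
    print(f"p = {p:<4}  surprise = {surprise(p):.2f}")
```

A probability of zero is rejected rather than returned as infinity, since an outcome that can never happen has no defined surprise.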

Introducing Entropy

The expected value of surprise is known as entropy. It represents the average surprise we can expect per occurrence. To calculate entropy, we multiply each outcome’s surprise by its probability and sum the results. Rearranging that sum, using the fact that the logarithm of an inverse is the negative of the logarithm, gives the standard form of entropy, as published by Claude Shannon in 1948.
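Written as a formula, the calculation described above looks roughly like the following. The symbols are assumptions introduced here for illustration: $X$ is the random outcome (for example, the color of the picked chicken), $p(x)$ is the probability of outcome $x$, and $H(X)$ is the entropy.

$$
H(X) = \sum_{x} p(x)\,\log\frac{1}{p(x)} = -\sum_{x} p(x)\,\log p(x)
$$

The first form is the expected surprise, and the second is the rearranged standard form. The base of the logarithm only sets the unit; base 2 gives entropy in bits.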

Entropy measures the average surprise per pick that we would expect if we repeated the same random pick many times. It quantifies the uncertainty or randomness in a system. A higher entropy indicates a higher level of uncertainty, while a lower entropy suggests a more predictable outcome.

Applying Entropy to Chicken Areas

Let’s apply the concept of entropy to our chicken example. We can calculate entropy for each area based on the probabilities of picking an orange or blue chicken. By plugging in the relevant values, we find that area A has an entropy of 0.59, area B has an entropy of 0.44, and area C has an entropy of 1.
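The calculation can be sketched in a few lines of Python. The chicken counts below are hypothetical: the text does not state how many chickens are in each area, so these counts were chosen only because they reproduce the quoted entropies. Base-2 logarithms are also an assumption, consistent with an entropy of 1 for an even split.

```python
import math


def entropy(counts):
    """Shannon entropy in bits of a distribution given as raw counts."""
    total = sum(counts)
    # Skip zero counts: an outcome that never occurs contributes nothing.
    return sum((c / total) * math.log2(total / c) for c in counts if c > 0)


# Hypothetical (orange, blue) counts, chosen only to match the quoted values.
areas = {"A": (6, 1), "B": (1, 10), "C": (7, 7)}

for name, counts in areas.items():
    print(f"Area {name}: entropy = {entropy(counts):.2f}")
```

With these assumed counts the rounded results are 0.59, 0.44, and 1.00, matching the values for areas A, B, and C.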

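These entropy values give us insights into the relative similarities and differences in the number of orange and blue chickens in each area. Higher entropy indicates a greater mix of chicken types, while lower entropy suggests a more homogeneous distribution. Assuming base-2 logarithms, an entropy of 1 is the highest possible value for two chicken types, and it occurs only when both types are equally likely, as in area C.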

FAQs

Q: How does entropy help in data science?

A: Entropy is a powerful tool in data science. It plays a key role in building classification trees, quantifying relationships between variables, and implementing dimension reduction algorithms, among other applications. By quantifying similarities and differences, entropy enables us to gain deeper insights into the data.

Q: Can you provide real-world examples of entropy in action?

A: Certainly! Entropy is used in various fields, such as natural language processing to measure how uncertain a language model is about the next word in a sentence, bioinformatics to measure the diversity of genetic sequences, and machine learning for decision tree construction.

Q: Is entropy the same as information gain?

A: No, but they are related. Information gain measures the reduction of entropy after a split in a decision tree, indicating how much information is gained by considering a specific attribute. Entropy provides the foundation for information gain calculations.
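To make the relationship concrete, here is a small sketch of information gain computed as the parent’s entropy minus the size-weighted average entropy of the groups produced by a split. The function names and the chicken counts are hypothetical and chosen only for illustration; base-2 logarithms are assumed.

```python
import math


def entropy(counts):
    """Shannon entropy in bits of a distribution given as raw counts."""
    total = sum(counts)
    return sum((c / total) * math.log2(total / c) for c in counts if c > 0)


def information_gain(parent, children):
    """Parent entropy minus the size-weighted average entropy of the children."""
    n = sum(parent)
    weighted = sum(sum(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical split: 8 orange and 8 blue chickens divided into two groups.
parent = (8, 8)
children = [(7, 1), (1, 7)]
print(f"information gain = {information_gain(parent, children):.3f}")
```

A split that separates the two colors perfectly would gain the parent’s full entropy, while a split that leaves each group as mixed as the parent would gain nothing.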

Conclusion

Entropy is a powerful concept that helps us quantify similarities and differences in data science. By understanding entropy, we can gain deeper insights into the uncertainty and randomness of a system. Whether you’re building classification trees or exploring the intricacies of machine learning, a solid understanding of entropy can take your data analysis skills to the next level.
